Ob quick start (#499)

* Add Chapter One of the tutorial.
* Updated some descriptions.
* Added some URLs.
* Add chapter02: How to deploy an oceanbase-ce cluster.
* Add all chapters of the tutorial. Waiting for review.
* Modify some files as the first review suggested.
* Modify some files as the first review suggested.
* Temporarily remove some chapters from this PR; they will be added back in a later PR.
* Change OB to OceanBase.
* 1. Remove pictures. 2. Add some descriptions of Prometheus and Grafana.
* Relocate the images.
* Change OceanBase Deploy to OBD.
* Fix some format errors.

docs/docs/junior-training/ob-quick-start/chapter01/1.md

@@ -0,0 +1,154 @@

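# Chapter 1: Overview of OceanBase Database

OceanBase Database is a native distributed relational database developed entirely in-house by Alibaba and Ant Group. It runs on clusters of commodity servers and, built on the Paxos protocol and a distributed architecture, provides financial-grade high availability and linear scalability without depending on any specific hardware. Its core technical advantages are high availability, linear scalability, high performance, and low cost.

OceanBase Database has the following features:

+ High availability

A single server failure heals automatically. Cross-city, multi-IDC disaster recovery is supported with zero data loss, meeting level 6 of the financial industry's disaster recovery standard (RPO = 0, RTO <= 30 seconds).

+ Linear scalability

Scaling is transparent, with automatic load balancing and horizontal scale-out that is invisible to applications. A cluster can exceed 1,500 nodes, hold petabytes of data, and store trillions of rows in a single table.

+ High compatibility with MySQL/Oracle

The Community Edition is compatible with the MySQL protocol, syntax, and usage conventions, so MySQL client tools can access OceanBase Database directly. Compatibility starts from MySQL 5.6.

The Enterprise Edition is compatible with both the MySQL and Oracle protocols; Oracle compatibility starts from Oracle 11g. Accessing an Oracle tenant of OceanBase requires OceanBase's own driver.

+ High performance

Quasi-in-memory data modification and a proprietary encoding and compression technique, combined with linear horizontal scaling, reached 707 million tpmC in the TPC-C benchmark.

+ Low cost

It runs on PC servers and low-end SSDs. A high storage compression ratio lowers storage cost, high performance lowers compute cost, and co-locating multiple tenants makes full use of system resources.

+ Multi-tenancy

The architecture is natively multi-tenant: one database cluster can serve multiple independent businesses with data isolated between tenants, which reduces deployment and O&M costs.

OceanBase Database supports all of Alipay's core businesses, as well as the core business systems of hundreds of customers in banking, insurance, securities, telecom, and other industries.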

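## OceanBase history

Before using OceanBase, let's take a brief look at its history.

+ Birth: In 2010, Dr. Yang Zhenkun, the founder of OceanBase, led the founding team in launching the OceanBase project. Its first application was Taobao's Favorites service, which is still an OceanBase customer today. The Favorites service has very large single tables, and OceanBase used an original approach to meet its need for high-concurrency joins between a large table and a small table.

+ Relational database: In early versions, applications accessed OceanBase through a custom API library. In 2012, OceanBase released a version with SQL support and became a functionally complete, general-purpose relational database for the first time.

+ First steps in finance: OceanBase entered Alipay (later Ant Group) and began serving financial-grade business scenarios. During the 2014 "Double 11" shopping festival, OceanBase began to carry part of the transaction database traffic. Later, the newly founded MYbank ran all of its core transaction databases on OceanBase.

+ Core financial database: In 2016, OceanBase released version 1.0 with a redesigned architecture. It added distributed transactions, improved scalability for high-concurrency write workloads, and introduced the multi-tenant architecture; this overall architecture is still in use today. By "Double 11" 2016, 100% of the traffic of Alipay's core databases ran on OceanBase, including transactions, payments, membership, and, most importantly, accounting.

+ Entering the external market: In 2017, OceanBase began piloting external business and was successfully deployed at Bank of Nanjing.

+ Accelerating commercialization: In 2018, OceanBase released version 2.0, which added an Oracle compatibility mode. This lowered the cost of adapting applications and drove rapid adoption among external customers.

+ Reaching the top: In 2019, OceanBase 2.2 entered TPC-C, the most authoritative OLTP database benchmark, and ranked first in the world with 60 million tpmC. In 2020 it set a new record of 707 million tpmC and, as of this writing, still ranks first. This demonstrates OceanBase's excellent scalability and stability. OceanBase is the first, and so far the only, Chinese database product on the TPC-C list.

+ HTAP mixed workloads: In 2021, OceanBase 3.0, built on a new vectorized execution engine, topped the TPC-H 30,000 GB benchmark with 15.26 million QphH. This marks a fundamental breakthrough in OceanBase's ability to handle mixed AP and TP workloads with a single engine.

+ Open source: On Children's Day (June 1) 2021, OceanBase announced that it was fully open source, committing to open collaboration and building an ecosystem together.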

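In June 2021, OceanBase officially launched the Community Edition and opened its source code, starting from version 3.1.0. The source code is hosted at [github.com/oceanbase](https://github.com/oceanbase) and is also mirrored to Gitee (OSChina) at [gitee.com/oceanbase](https://gitee.com/oceanbase).

The open-source components include:

+ The database kernel, OceanBase
+ The reverse access proxy `obproxy`
+ The command-line database client `obclient`
+ The automated deployment tool `OBD`
+ The C driver `obconnector-c`
+ The CDC components `oblogproxy` and the `canal` plugin
+ The OceanBase monitoring agent `obagent`
+ The Spark plugin `obspark` (to be open-sourced)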

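## OceanBase customer cases

Unlike other open-source databases, OceanBase had an Enterprise Edition before a Community Edition, so large-enterprise commercial cases came before Community Edition cases. The core capabilities of the Community Edition and the Enterprise Edition are the same.

Typical customers include:

+ Internal use: Ant Group (including Alipay and MYbank).
+ Banking: Industrial and Commercial Bank of China; Bank of Nanjing, Bank of Dongguan, Bank of Tianjin, Bank of Suzhou; Changshu Rural Commercial Bank.
+ Insurance: PICC, China United Property Insurance.
+ Securities: China Merchants Securities, China International Fund Management (CIFM).
+ Non-financial industries: Zhejiang Mobile, Shandong Mobile; Digital Jiangxi; Sinopec.

For detailed cases, see [https://www.oceanbase.com/customer/home](https://www.oceanbase.com/customer/home). Many more industries and customers are not listed here.

OceanBase is essentially single-process software that is deployed independently and is not tied to any hardware or cloud platform, so it can run on cloud servers from any cloud vendor. OceanBase is also offered as a public cloud database service on Alibaba Cloud ([https://www.aliyun.com/product/oceanbase](https://www.aliyun.com/product/oceanbase)).

Customer cases of OceanBase on the public cloud (including self-managed deployments on ECS) include:

+ China United Property Insurance
+ GCash, a mobile payment service in the Philippines
+ DANA, an Indonesian e-wallet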

## Introduction to OceanBase Community Edition

OceanBase Database Community Edition is licensed under the [MulanPubL-2.0 license](http://license.coscl.org.cn/MulanPubL-2.0/index.html). You may copy and use the source code free of charge. When you modify or distribute the source code, you must comply with the Mulan license.

The official website of OceanBase Community Edition is [open.oceanbase.com](https://open.oceanbase.com).

### Downloads

+ Official website: [https://open.oceanbase.com/softwareCenter/community](https://open.oceanbase.com/softwareCenter/community)
+ GitHub: [https://github.com/oceanbase/oceanbase/releases/](https://github.com/oceanbase/oceanbase/releases/)
+ Alibaba Cloud Yum repository: [https://mirrors.aliyun.com/oceanbase/OceanBase.repo](https://mirrors.aliyun.com/oceanbase/OceanBase.repo)

### Supported operating systems

OceanBase Community Edition supports the following operating systems:

+ CentOS: 7.2 or later recommended.
+ Debian: 9.8 and 10.9 recommended.
+ openSUSE: 15.2 recommended.
+ OpenAnolis: 8.2 recommended.
+ SUSE: 15.2 recommended.
+ Ubuntu: 16.04, 18.04, 20.04, and similar versions recommended.

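### Differences from MySQL

OceanBase Community Edition is compatible with MySQL syntax and features (mainly the vast majority of MySQL 5.6 syntax plus some new MySQL 8.0 features), but its internals have nothing to do with MySQL: it does not depend on any open-source MySQL components and has no InnoDB engine.

Compared with MySQL storage, OceanBase's own storage engine compresses data much more effectively: the Community Edition can reduce space usage to about a quarter of what MySQL needs.

OceanBase is a distributed database cluster product. In production, data is kept in three replicas by default, and the replicas are synchronized not by asynchronous or semi-synchronous replication but by using the Paxos protocol to replicate transaction logs. An OceanBase cluster can be deployed across IDCs and cities; when a machine or an IDC fails, replicas inside the cluster fail over automatically without losing data. OceanBase is naturally suited to "two cities, three data centers" geo-disaster recovery and active-active deployments.

An OceanBase cluster supports multiple tenants (also called multiple instances). Tenants are allocated on demand, scale elastically, and are highly available, much like a cloud database service. O&M staff only need to maintain a few clusters to provide many instances to the business, which makes it very easy to use.

OceanBase supports horizontal splitting through partitioned tables, so no database or table sharding is needed; SQL and transactions are fully transparent to the business, with no functional restrictions. Partitioned tables also scale linearly well: the largest known single-tenant deployment has 1,500 nodes.

OceanBase's SQL engine is far more capable than MySQL's. It supports SQL parsing and execution plan caching, complex SQL operations, and outlines for influencing SQL execution plans. A single SQL engine over a single data source serves mixed OLTP and ROLAP workloads, which is commonly called HTAP capability.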

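### Core features of the Community Edition

OceanBase Community Edition includes all the core features of OceanBase Enterprise Edition:

+ Multi-replica high availability with strong synchronization.
+ Multi-tenancy.
+ Online elastic scaling.
+ Geo-disaster recovery and active-active deployment (including two cities with three data centers, three cities with five data centers, and so on).
+ Distributed capabilities such as partitioned tables and replicated tables.
+ HTAP.
+ MySQL compatibility.
+ Backup and restore.
+ CDC.

The Enterprise Edition differs from the Community Edition in that it includes more advanced features, such as compatibility with commercial database features, graphical management tools, operation auditing, security encryption, and extended high availability. For more about the Enterprise Edition, see its official website ([`oceanbase.com`](https://oceanbase.com)).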

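## Business scenarios suited to the Community Edition

+ Scenarios with a very large number of MySQL 5.6/5.7 instances.

A large MySQL fleet needs an automated O&M platform, but such platforms often still need DBA intervention when handling unexpected MySQL crashes, failovers, and primary/standby inconsistency. High availability and strong consistency are MySQL's biggest risks.

OceanBase's multi-tenancy, high availability, and strong consistency can fully resolve this pain point.

+ Scenarios where MySQL 5.6/5.7 data volumes are very large and storage costs are high.

Once MySQL business data grows beyond several terabytes, query and read/write performance may degrade, DDL on large tables takes longer and becomes riskier, and a single machine's disk capacity may reach its expansion limit.

Online DDL in OceanBase MySQL tenants and the high data compression ratio can address these pain points.

+ Scenarios with heavy or highly variable access load.

Under heavy access load, distributed database middleware built on MySQL can share part of the load and storage pressure, but it lacks strongly consistent cross-node queries, needs distributed transaction middleware to coordinate transactions, and may require logical data splitting (commonly called sharding databases and tables) when scaling out, which is costly and risky to operate.

OceanBase MySQL tenants provide horizontal splitting through partitioned tables, with native SQL and transaction support that is transparent to the business. They also support online scale-out and scale-in: internal data migration runs asynchronously and stays highly available, so a failure during scaling is not a concern. This addresses the pain points above.

+ Complex queries on transactional databases.

Transactional databases sometimes need a small number of complex queries over large amounts of data; the traditional solution is to synchronize the data to a data warehouse and query it there. OceanBase's SQL engine serves both OLTP and OLAP scenarios and uses an advanced SQL optimizer proven in complex Oracle business scenarios, so it supports complex SQL optimization and efficient execution. You can therefore run complex queries directly on the transactional database and avoid unnecessary data synchronization. In addition, OceanBase offers several levels of read/write separation to limit the impact of complex queries on transactional workloads.

More scenarios will be summarized as practice accumulates; stay tuned.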

## Contact us

OceanBase enthusiasts, users, and customers are welcome to contact us with any questions or feedback:

+ Enterprise Edition website: [https://oceanbase.com](https://oceanbase.com).
+ Community Edition website: [https://open.oceanbase.com](https://open.oceanbase.com).
+ File an `Issue` on the Community Edition project site: [https://github.com/oceanbase/oceanbase/issues](https://github.com/oceanbase/oceanbase/issues).
+ DingTalk group: group number `33254054`.

docs/docs/junior-training/ob-quick-start/chapter02/2.0.md

@@ -0,0 +1,27 @@

# Chapter 2: How to deploy OceanBase Community Edition

This chapter describes how to deploy an OceanBase Community Edition cluster manually or automatically, including single-replica and three-replica clusters.

## Contents

+ [Deployment preparation](2.1.md)
+ [How to quickly try out OB](2.2.md)
+ [How to plan an OceanBase cluster deployment](2.3.md)
+ [How to initialize the server environment](2.4.md)
+ [How to install the OBD automated deployment tool](2.5.md)
+ [How to deploy a single-node cluster with OBD](2.6.md)
+ [How to deploy a multi-node cluster with OBD](2.7.md)
+ [How to view and modify OceanBase cluster parameters](2.8.md)
+ [How to deploy OBAgent](2.9.md)
+ [How to restart an OceanBase cluster](2.10.md)
+ [(Advanced) How to deploy an OceanBase cluster manually](2.11.md)
+ [FAQ](2.12.md)
+ [Appendix](2.13.md)

## Contact us

OceanBase enthusiasts, users, and customers are welcome to contact us with any questions or feedback:

+ Community Edition forum: [https://open.oceanbase.com/answer](https://open.oceanbase.com/answer).
+ File an `Issue` on the Community Edition project site: [https://github.com/oceanbase/oceanbase/issues](https://github.com/oceanbase/oceanbase/issues).
+ DingTalk group: group number `33254054`.

docs/docs/junior-training/ob-quick-start/chapter02/2.1.md

@@ -0,0 +1,66 @@

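# Deployment preparation

OceanBase is a distributed cluster product: a production environment needs at least three machines, while a learning environment can use a single-node deployment.

Deploying OceanBase has much in common with deploying traditional databases: there are best-practice recommendations for the operating system, hardware, software settings, file systems, and so on. These are the foundation for OceanBase to run stably with high performance. The Community Edition also provides tools that automate part of this work.

<!-- more -->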

## Software overview

OceanBase is essentially a single-process program whose executable is named `observer`. It can be installed from RPM packages or compiled from source. This course installs everything from RPM packages.

The packages can be downloaded from:

+ Official website: [https://open.oceanbase.com/softwareCenter/community](https://open.oceanbase.com/softwareCenter/community)
+ GitHub: [https://github.com/oceanbase/oceanbase/releases/](https://github.com/oceanbase/oceanbase/releases/)
+ Alibaba Cloud Yum repository: [https://mirrors.aliyun.com/oceanbase/OceanBase.repo](https://mirrors.aliyun.com/oceanbase/OceanBase.repo)

| Package | Process | Purpose |
|------------------------------------------|----------|------------------------------------|
| oceanbase-ce-3.1.1-1.el7.x86_64.rpm | observer | OceanBase database process; runs resident in the background. |
| oceanbase-ce-libs-3.1.1-1.el7.x86_64.rpm | | Provides the libraries the software needs; does not run as a process. |
| obproxy-3.1.0-1.el7.x86_64.rpm | obproxy | OceanBase reverse access proxy; single process, runs resident in the background. |
| ob-deploy-1.1.1-1.el7.x86_64.rpm | obd | OceanBase automated deployment tool; provides the deployment command line and does not stay resident. |
| obclient-2.0.0-2.el8.x86_64.rpm | obclient | Official OceanBase command-line client. |

Note: version numbers will change over time; use the versions actually available.

If the machines can reach the internet, you can add the Alibaba Cloud YUM repository locally and install the packages with the `yum` command.

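```bash
# Run as root (or prefix each command with sudo).
# yum-config-manager is provided by yum-utils.
yum install -y yum-utils
yum-config-manager --add-repo https://mirrors.aliyun.com/oceanbase/OceanBase.repo
# Package names match the RPM names in the table above.
yum -y install ob-deploy oceanbase-ce obclient
```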

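## Deployment resource requirements

OceanBase Database places requirements on host resources at runtime, mainly CPU, memory, and disk space. The requirements depend on the purpose of the installation.

| Purpose | CPU (cores) | Available memory | Disk | Remarks |
|-----|---------|------|----|----|
| Feature learning | 2 | 10G | 10G | No data initialized. |
| Performance testing | 24 | 128G | SSD, 500G or more | The data disk and the log disk must be separate. |
| Production | 32 | 256G | SSD, 2T or more | The data disk and the log disk must be separate. The log disk should be 3 to 4 times the size of memory. As data grows, the data disk must grow as well. |

Note: the requirements above for performance testing and production are recommendations. Later Community Edition releases will further lower the memory requirement.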
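To compare a host against the table above before installing, a small check like the following may help. It is only a sketch under stated assumptions: the minimums are copied from the "Feature learning" row (2 cores, 10G available memory), the 3-4x log disk rule comes from the "Production" row, available memory is read from `MemAvailable` in `/proc/meminfo` (Linux only, rounded down to GiB), and all function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical pre-install check against the resource table above.

# Assumed minimums, copied from the "Feature learning" row of the table.
MIN_CPU_CORES=2
MIN_AVAIL_MEM_GB=10

# Succeeds (exit status 0) if CORES and AVAIL_MEM_GB meet the learning minimums.
meets_learning_minimum() {
  local cores=$1 mem_gb=$2
  [ "$cores" -ge "$MIN_CPU_CORES" ] && [ "$mem_gb" -ge "$MIN_AVAIL_MEM_GB" ]
}

# Prints the suggested log disk size range "LOW-HIGH" in GB for MEM_GB of memory,
# using the 3-4x rule of thumb from the "Production" row.
log_disk_range_gb() {
  local mem_gb=$1
  echo "$((mem_gb * 3))-$((mem_gb * 4))"
}

# Read the current host's values (Linux only).
host_cores() { nproc; }
host_avail_mem_gb() {
  awk '/^MemAvailable:/ { print int($2 / 1024 / 1024) }' /proc/meminfo
}

# Example, run on the target host:
#   meets_learning_minimum "$(host_cores)" "$(host_avail_mem_gb)" && echo OK || echo "below minimum"
#   log_disk_range_gb 256
```

The checks take plain numbers as arguments so they can be reused with values collected from remote hosts (for example over SSH) rather than only the local machine.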

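OceanBase also has operating system requirements. The following systems are currently supported:

+ Red Hat / CentOS 7.x/8.x
+ SUSE / openSUSE 15.x
+ Anolis 7.x/8.x
+ Debian 9.x
+ Ubuntu 20.x

## Deployment process overview

In short, automated deployment consists of the following steps:

+ Initialize the environment of each OceanBase node, including parameter settings and file system directory setup.
+ Set up passwordless SSH login from the control machine to each OceanBase node.
+ Prepare the OBD automated deployment configuration file.
+ Use OBD to deploy the directories on the cluster nodes.
+ Use OBD to start and initialize the cluster.

Later sections describe in detail how to deploy single-node and three-node clusters, as well as some steps of a manual deployment.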

docs/docs/junior-training/ob-quick-start/chapter02/2.10.md

@@ -0,0 +1,96 @@

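# How to restart an OceanBase cluster

OB itself does not provide a "restart cluster" command. OB's core capability is high availability, which presupposes a three-replica deployment: when a minority of nodes fail, OB may fail over internally and continue to serve reads and writes. OB provides functions to stop and start a replica (at the `zone` level or the `server` level), and it only allows stopping a minority of nodes.

Therefore, an OB cluster is restarted by external operations, for example by stopping the process with the `kill` command and then starting the `observer` process again.

The earlier section on modifying parameters already showed how to kill the node process in a single-replica cluster; this section shows how to restart a three-replica cluster. In production, to keep the cluster's unavailable time as short as possible, restarts follow a conservative strategy: restart the cluster nodes one `zone` or one `server` at a time. This can take a while, from several minutes to over ten minutes. While first learning OceanBase, we start with the simple restart method; the safe and robust method will be covered later in the OceanBase O&M chapters.

## Manually restarting OceanBase cluster nodes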

```bash
# SSH to node 1
ssh 172.20.249.52

# Stop the process with a normal kill (SIGTERM). Do not use `kill -9` unless this is a test or you have assessed the risk.
kill `pidof observer`

# Wait 60 s for the process to exit completely
sleep 60

# Check repeatedly that the process has fully exited (no output means it is gone)
ps -ef | grep observer | grep -v grep

# Set the library path
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase-ce/lib/

# Start the process
cd /home/admin/oceanbase-ce && bin/observer

# Wait 10 s for the process to start
sleep 10

# Check repeatedly that the process has not exited during startup
ps -ef | grep observer | grep -v grep

# Wait 60 s for the process to start completely and finish recovery
sleep 60

# Confirm that the process is listening (ports 2881 and 2882 by default)
netstat -ntlp

# In the sys tenant, check that each node's status (`status`) and service start time (`start_service_time`) are normal.
mysql -h 172.20.249.52 -u root@sys -P 2881 -p -c -A -e "select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free, round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb, usec_to_time(b.last_offline_time) last_offline_time, usec_to_time(b.start_service_time) start_service_time, b.status, usec_to_time(b.stop_time) stop_time, b.build_version from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port) order by a.zone, a.svr_ip;"
```

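Only after the first node has restarted successfully should you repeat the procedure on the second node.

Of course, if this is only a test and availability does not matter, you can skip the checks above, kill the processes on all cluster nodes directly, and then start them again. After the nodes come back up, the cluster may need a few minutes to recover data and communication. If there was heavy read/write activity before the restart, recovery of a node process can take much longer, from over ten minutes to several tens of minutes.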
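The fixed `sleep 60` in the manual procedure is only an estimate of how long `observer` needs to exit. One alternative, sketched below under the assumption that you run it as the same user that owns the process, is to poll with `kill -0` until the PID disappears or a timeout expires. The function name `wait_for_exit` and the 120-second timeout in the example are illustrative, not part of OceanBase or OBD.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait until process PID has exited.
# Returns 0 once the process is gone, 1 if it is still alive after TIMEOUT seconds.
wait_for_exit() {
  local pid=$1 timeout=${2:-60} waited=0
  # kill -0 sends no signal; it only tests whether the process still exists.
  while kill -0 "$pid" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# Example on an OceanBase node:
#   pid=$(pidof observer)
#   kill "$pid"
#   wait_for_exit "$pid" 120 || echo "observer $pid is still running"
```

A timeout that fires is a signal to investigate (for example in the `observer` logs) rather than to escalate straight to `kill -9`.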

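## Restarting the cluster with OBD

The section above explains how OceanBase cluster nodes are restarted by hand; the OBD tool can automate this operation. Note, however, that OBD's cluster restart may not currently include all the necessary checks, so it is fine for test environments but should be used with caution in production.

The command to restart a cluster with OBD is `obd cluster restart <deploy name>`.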

```bash
obd cluster restart obce-3zones

Output:
[admin@obce00 oceanbase-ce]$ obd cluster restart obce-3zones
Get local repositories and plugins ok
Open ssh connection ok
Stop observer ok
Stop obproxy ok
obce-3zones stopped
Get local repositories and plugins ok
Open ssh connection ok
Cluster param config check ok
Check before start observer ok
Check before start obproxy ok
Start observer ok
observer program health check ok
Connect to observer ok
Wait for observer init ok
+-------------------------------------------------+
|                     observer                    |
+---------------+---------+------+-------+--------+
| ip            | version | port | zone  | status |
+---------------+---------+------+-------+--------+
| 172.20.249.49 | 3.1.0   | 2881 | zone2 | active |
| 172.20.249.51 | 3.1.0   | 2881 | zone3 | active |
| 172.20.249.52 | 3.1.0   | 2881 | zone1 | active |
+---------------+---------+------+-------+--------+

Start obproxy ok
obproxy program health check ok
Connect to obproxy ok
Initialize cluster
+-------------------------------------------------+
|                     obproxy                     |
+---------------+------+-----------------+--------+
| ip            | port | prometheus_port | status |
+---------------+------+-----------------+--------+
| 172.20.249.52 | 2883 | 2884            | active |
| 172.20.249.49 | 2883 | 2884            | active |
| 172.20.249.51 | 2883 | 2884            | active |
+---------------+------+-----------------+--------+
obce-3zones running
```

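When restarting the cluster above, OBD restarted all components by default (including `OBSERVER` and `OBPROXY`). You can also use the `-c` option to restart only specific components.

Restarting OBPROXY works the same way as restarting OBSERVER: it also has a working directory and startup parameters. We will not cover it here; OBPROXY O&M is introduced later in the OceanBase O&M chapters.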

docs/docs/junior-training/ob-quick-start/chapter02/2.11.md

@@ -0,0 +1,459 @@

# (Advanced) How to deploy an OceanBase cluster manually

Once you are familiar with how OBD deploys an OceanBase cluster and the principles behind it, you can try deploying an OceanBase cluster by hand. The benefit is that when OBD does not meet your needs, you can write your own scripts to deploy OceanBase clusters, and when a cluster runs into trouble, you can handle the emergency manually.

## Deployment plan

This section describes how to deploy a three-node OceanBase cluster manually: from the control machine, log in remotely to each OceanBase node to deploy and start the `observer` process, and deploy the `obproxy` process on the control machine itself.

+ Machine information:

| Machine type | ECS cloud host |
|------|-------------------------------|
| IP | 172.20.249.50 |
| NIC name | eth0 |
| OS | CentOS Linux release 8.4.2105 |
| CPU | 4C |
| Memory | 14G total, 11G available |
| Disk 1 | Cloud disk /dev/vda 100G |
| Disk 2 | Cloud disk /dev/vdb 100G |

+ Machine roles:

| Role | Machine | Remarks |
|----------|---------------|---------------------|
| OBD | 172.20.249.50 | Control machine, automated deployment software |
| OBSERVER | 172.20.249.52 | OceanBase database zone1 |
| | 172.20.249.49 | OceanBase database zone2 |
| | 172.20.249.51 | OceanBase database zone3 |
| OBPROXY | 172.20.249.50 | OceanBase reverse access proxy |
| OBCLIENT | 172.20.249.50 | OceanBase command-line client |

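Before deploying, initialize the server environment first; see the earlier section "How to initialize the server environment".

+ Check time synchronization among the three nodes

The command commonly used to check the clock offset between the local machine and a target node is `clockdiff`.

Example: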

```bash
[admin@obce02 oceanbase]$ sudo clockdiff 172.20.249.52
[sudo] password for admin:
.
host=172.20.249.52 rtt=750(187)ms/0ms delta=0ms/0ms Sun Sep 12 14:52:24 2021
[admin@obce02 oceanbase]$ sudo clockdiff 172.20.249.51
.
host=172.20.249.51 rtt=750(187)ms/0ms delta=0ms/0ms Sun Sep 12 14:52:30 2021
```

`clockdiff` may report an error on some machines. In that case, use the following command to check the clock offset instead.

```bash
[admin@obce02 oceanbase]$ ping -T tsandaddr 172.20.249.52 -c 2
PING 172.20.249.52 (172.20.249.52) 56(124) bytes of data.
64 bytes from 172.20.249.52: icmp_seq=1 ttl=64 time=0.161 ms
TS: 172.20.249.49 24851014 absolute
172.20.249.52 -1
172.20.249.52 0
172.20.249.49 1

64 bytes from 172.20.249.52: icmp_seq=2 ttl=64 time=0.172 ms
TS: 172.20.249.49 24852054 absolute
172.20.249.52 -1
172.20.249.52 0
172.20.249.49 1
```

If the clock offset among the three nodes exceeds 50 ms, the later cluster initialization will certainly fail.

Also note that the clock offset between nodes may grow slowly over time: the cluster may work fine today, but a day later, once a node's offset has grown beyond 50 ms, that node will go offline.
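To run this check on several nodes without reading each line by eye, the `delta=` field of the `clockdiff` output shown above can be parsed. The sketch below assumes that output format (`delta=<a>ms/<b>ms`), treats both numbers as offsets in milliseconds, and compares their absolute values with the 50 ms limit; the function name `clock_skew_ok` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check one line of clockdiff output against a skew limit.
# Returns 0 if both delta values are within LIMIT_MS (default 50),
# 1 if either exceeds it, and 2 if the line has no "delta=" field.
clock_skew_ok() {
  local line=$1 limit=${2:-50} deltas d
  # Extract the two numbers from "delta=<a>ms/<b>ms" (each may be negative).
  deltas=$(printf '%s\n' "$line" |
    sed -n 's|.*delta=\(-\{0,1\}[0-9]\{1,\}\)ms/\(-\{0,1\}[0-9]\{1,\}\)ms.*|\1 \2|p')
  [ -n "$deltas" ] || return 2
  for d in $deltas; do
    d=${d#-}                      # absolute value
    [ "$d" -le "$limit" ] || return 1
  done
  return 0
}

# Example from one of the nodes:
#   for ip in 172.20.249.52 172.20.249.49 172.20.249.51; do
#     line=$(sudo clockdiff "$ip" | grep 'delta=')
#     clock_skew_ok "$line" 50 && echo "$ip ok" || echo "$ip: skew too large or unreadable"
#   done
```

Because the offset can drift, running such a check periodically (for example from cron) may catch a node before it drifts past the limit.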

## Install the OceanBase software package

For a manual deployment, install the OceanBase OBSERVER software on each node.

```bash
[admin@obce02 ~]$ ls -lrth /tmp/oceanbase-ce-*.rpm
-rw-r--r-- 1 admin admin 45M Sep 12 13:36 /tmp/oceanbase-ce-3.1.0-3.el8.x86_64.rpm

[admin@obce02 ~]$ sudo rpm -ivh /tmp/oceanbase-ce-*.rpm
warning: /tmp/oceanbase-ce-3.1.0-3.el8.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID e9b4a7aa: NOKEY
Verifying... ################# [100%]
Preparing... ################# [100%]
Updating / installing...
1:oceanbase-ce-libs-3.1.0-3.el8 ################# [ 50%]
2:oceanbase-ce-3.1.0-3.el8 ################# [100%]
```

The package is installed to `/home/admin/oceanbase` by default. The directory structure is as follows:

```bash
[admin@obce01 ~]$ tree oceanbase
oceanbase
├── bin
│   ├── import_time_zone_info.py
│   └── observer
├── etc
│   └── timezone_V1.log
└── lib
    ├── libaio.so -> libaio.so.1.0.1
    ├── libaio.so.1 -> libaio.so.1.0.1
    ├── libaio.so.1.0.1
    ├── libmariadb.so -> libmariadb.so.3
    └── libmariadb.so.3
```

Tip: you can also extract the RPM package directly into a directory of your choice instead of installing it to the default directory.

## (Optional) Clean up directories and data

**Skip this step on a first-time deployment.**

This step is for when an installation or deployment fails and you need to empty the directories and data before deploying again.

```bash
# Forcibly stop observer (acceptable only because the data is being discarded)
kill -9 `pidof observer`
# Empty each data/log directory; the globs follow the symlinks into /data and /redo
/bin/rm -rf ~/oceanbase/store/obdemo/*/*
```

Check that the directory structure matches the following.

```bash
tree ~/oceanbase/store/ /data/ /redo/

Output:
[admin@obce02 ~]$ tree ~/oceanbase/store/ /data/ /redo/
/home/admin/oceanbase/store/
└── obdemo
    ├── clog -> /redo/obdemo/clog
    ├── etc2 -> /redo/obdemo/etc2
    ├── etc3 -> /data/obdemo/etc3
    ├── ilog -> /redo/obdemo/ilog
    ├── slog -> /redo/obdemo/slog
    └── sstable -> /data/obdemo/sstable
/data/
└── obdemo
    ├── etc3
    └── sstable
/redo/
└── obdemo
    ├── clog
    ├── etc2
    ├── ilog
    └── slog

15 directories, 0 files
```

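## Initialize the data directories

**Run this step only on a first-time deployment. If you are redeploying and the directories already exist, skip it.**

In a manual deployment, all the relevant directories on the OceanBase nodes must be created by hand.

```bash
# Switch to the admin user and run the remaining commands in that shell
su - admin
# Create the store directory and the real data and log directories
mkdir -p ~/oceanbase/store/obdemo /data/obdemo/{sstable,etc3} /redo/obdemo/{clog,ilog,slog,etc2}
# Link the log-related subdirectories to /redo
for f in {clog,ilog,slog,etc2}; do ln -s /redo/obdemo/$f ~/oceanbase/store/obdemo/$f ; done
# Link the data-related subdirectories to /data
for f in {sstable,etc3}; do ln -s /data/obdemo/$f ~/oceanbase/store/obdemo/$f; done
```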

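Notes:

+ First create the top-level data directory `~/oceanbase/store/obdemo` under the working directory, the data file directory `/data/obdemo`, and the log-related directory `/redo/obdemo`.

Note that, unlike the node directories that OBD creates during automated deployment, I added the cluster name (`obdemo`) to the directory paths.

+ The second difference is that `~/oceanbase/store/obdemo` is a real directory, while its subdirectories are symbolic links into two other file system paths (`/data/` and `/redo/`). In production, these two file systems should preferably be on two separate physical disks, or at minimum on two separate logical disks.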
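The same layout can be wrapped in a small function so that the cluster name and the two mount points become parameters, which helps when repeating the deployment on several nodes. This is a sketch for bash (it relies on brace expansion); `init_ob_dirs` is a hypothetical name, and it uses `ln -sfn` instead of plain `ln -s` so that running it a second time replaces the existing links rather than creating nested ones inside the linked directories.

```bash
#!/usr/bin/env bash
# Hypothetical helper reproducing the manual directory layout above.
# Usage: init_ob_dirs CLUSTER STORE_ROOT DATA_ROOT REDO_ROOT
init_ob_dirs() {
  local cluster=$1 store=$2 data=$3 redo=$4 d
  # Real directories: the store directory plus the data and log directories.
  mkdir -p "$store/$cluster" \
           "$data/$cluster"/{sstable,etc3} \
           "$redo/$cluster"/{clog,ilog,slog,etc2} || return 1
  # Log-related subdirectories point to the redo file system.
  for d in clog ilog slog etc2; do
    ln -sfn "$redo/$cluster/$d" "$store/$cluster/$d" || return 1
  done
  # Data-related subdirectories point to the data file system.
  for d in sstable etc3; do
    ln -sfn "$data/$cluster/$d" "$store/$cluster/$d" || return 1
  done
}

# Example matching this section (run as admin on each OceanBase node):
#   init_ob_dirs obdemo ~/oceanbase/store /data /redo
```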

Let's look at the directory structure after initialization. This structure matters: when the `observer` process fails to start, the cause is sometimes a wrong directory structure or wrong permissions.

```bash
[admin@obce02 ~]$ tree ~/oceanbase/store/ /data/ /redo/
/home/admin/oceanbase/store/
└── obdemo
    ├── clog -> /redo/obdemo/clog
    ├── etc2 -> /redo/obdemo/etc2
    ├── etc3 -> /data/obdemo/etc3
    ├── ilog -> /redo/obdemo/ilog
    ├── slog -> /redo/obdemo/slog
    └── sstable -> /data/obdemo/sstable
/data/
└── obdemo
    ├── etc3
    └── sstable
/redo/
└── obdemo
    ├── clog
    ├── etc2
    ├── ilog
    └── slog

15 directories, 0 files
```

## Start the OBSERVER process

Most startup parameters are the same on every machine; only a few differ and need special attention.

+ `172.20.249.52`

```bash
su - admin
# Add the bundled libraries to the library path (persisted in the profile)
echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase/lib' >> ~/.bash_profile
. ~/.bash_profile

# Start observer for zone1
cd ~/oceanbase && bin/observer -i eth0 -p 2881 -P 2882 -z zone1 \
  -d ~/oceanbase/store/obdemo \
  -r '172.20.249.52:2882:2881;172.20.249.49:2882:2881;172.20.249.51:2882:2881' \
  -c 20210912 -n obdemo \
  -o "memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=50G,stack_size=1536K,config_additional_dir=/data/obdemo/etc3;/redo/obdemo/etc2"
```

+ `172.20.249.49`

```bash
su - admin
# Add the bundled libraries to the library path (persisted in the profile)
echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase/lib' >> ~/.bash_profile
. ~/.bash_profile

# Start observer for zone2
cd ~/oceanbase && bin/observer -i eth0 -p 2881 -P 2882 -z zone2 \
  -d ~/oceanbase/store/obdemo \
  -r '172.20.249.52:2882:2881;172.20.249.49:2882:2881;172.20.249.51:2882:2881' \
  -c 20210912 -n obdemo \
  -o "memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=50G,stack_size=1536K,config_additional_dir=/data/obdemo/etc3;/redo/obdemo/etc2"
```

+ `172.20.249.51`

```bash
su - admin
# Add the bundled libraries to the library path (persisted in the profile)
echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase/lib' >> ~/.bash_profile
. ~/.bash_profile

# Start observer for zone3
cd ~/oceanbase && bin/observer -i eth0 -p 2881 -P 2882 -z zone3 \
  -d ~/oceanbase/store/obdemo \
  -r '172.20.249.52:2882:2881;172.20.249.49:2882:2881;172.20.249.51:2882:2881' \
  -c 20210912 -n obdemo \
  -o "memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=50G,stack_size=1536K,config_additional_dir=/data/obdemo/etc3;/redo/obdemo/etc2"
```

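If the three nodes have identical machine specs, only one startup parameter differs between them: `-z`, which specifies the node's `zone`. The three nodes in three `zone`s are initialized into one three-replica cluster. The `-o` parameter is not mandatory; it is used here mainly because the test machines are short on memory, so several memory-related parameters must be set. If your machines have enough memory (for example, more than 64G), you can omit the `-o` part.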
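Since only `-z` differs, the per-node commands can be generated from one list of IPs, which avoids copy-and-paste mistakes in the `-r` list. The sketch below assumes the same ports (`-P 2882` for RPC, `-p 2881` for SQL), cluster name, cluster ID, and directories used above, and maps the Nth IP to `zoneN`; it only prints the commands and leaves out the `-o` memory options for brevity. `build_rs_list` and `print_observer_cmds` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the "-r" rootservice list "ip:rpc_port:sql_port;...".
build_rs_list() {
  local rpc_port=$1 sql_port=$2 ip out=""
  shift 2
  for ip in "$@"; do
    out+="${out:+;}${ip}:${rpc_port}:${sql_port}"
  done
  printf '%s\n' "$out"
}

# Print (not run) the observer start command for each node; the Nth IP gets zoneN.
print_observer_cmds() {
  local rs i=1 ip
  rs=$(build_rs_list 2882 2881 "$@")
  for ip in "$@"; do
    printf "%s: cd ~/oceanbase && bin/observer -i eth0 -p 2881 -P 2882 -z zone%d -d ~/oceanbase/store/obdemo -r '%s' -c 20210912 -n obdemo\n" \
      "$ip" "$i" "$rs"
    i=$((i + 1))
  done
}

# Example:
#   print_observer_cmds 172.20.249.52 172.20.249.49 172.20.249.51
```

Printing instead of executing keeps the helper safe to run anywhere; the output can be reviewed and then pasted on each node.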

Check that the processes on all three nodes started normally, mainly by checking that the ports are listening. Query all nodes in a batch from the control machine (here `$IPS` holds the IP addresses of the three nodes):

```bash
[admin@obce00 oceanbase-ce]$ for ob in $IPS;do echo $ob; ssh $ob "netstat -ntlp"; done
172.20.249.52
(Not all processes could be identified, non-owned process info
will not be shown, you would have to be root to see it all.)
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -
tcp 0 0 0.0.0.0:2881 0.0.0.0:* LISTEN 10084/bin/observer
tcp 0 0 0.0.0.0:2882 0.0.0.0:* LISTEN 10084/bin/observer
172.20.249.49
(Not all processes could be identified, non-owned process info
will not be shown, you would have to be root to see it all.)
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:2881 0.0.0.0:* LISTEN 10213/bin/observer
tcp 0 0 0.0.0.0:2882 0.0.0.0:* LISTEN 10213/bin/observer
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -
172.20.249.51
(Not all processes could be identified, non-owned process info
will not be shown, you would have to be root to see it all.)
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:2881 0.0.0.0:* LISTEN 10103/bin/observer
tcp 0 0 0.0.0.0:2882 0.0.0.0:* LISTEN 10103/bin/observer
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -
```
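Reading three `netstat` listings by eye is error-prone. Below is a sketch of an automated check, assuming the `netstat -ntl` output format shown above (a local address ending in `:PORT`, followed later on the line by `LISTEN`); `ports_listening` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every given PORT is in LISTEN state
# in the netstat text passed as the first argument.
ports_listening() {
  local output=$1 port
  shift
  for port in "$@"; do
    printf '%s\n' "$output" | grep -Eq ":${port}[[:space:]].*LISTEN" || return 1
  done
  return 0
}

# Example from the control machine, with $IPS holding the node IPs:
#   for ob in $IPS; do
#     if ports_listening "$(ssh "$ob" netstat -ntl)" 2881 2882; then
#       echo "$ob ok"
#     else
#       echo "$ob: observer is not listening on 2881/2882"
#     fi
#   done
```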

## Cluster bootstrap (initialization)

Once all three OceanBase nodes have started and are listening normally, connect to any one node (directly through port 2881) and run the bootstrap (`bootstrap`, that is, cluster initialization).

The initial password is empty.

```bash
mysql -h 172.20.249.49 -u root -P 2881 -p -c -A

set session ob_query_timeout=1000000000; alter system bootstrap ZONE 'zone1' SERVER '172.20.249.52:2882', ZONE 'zone2' SERVER '172.20.249.49:2882', ZONE 'zone3' SERVER '172.20.249.51:2882' ;

Output:
[admin@obce00 ~]$ mysql -h 172.20.249.49 -u root -P 2881 -p -c -A
Enter password:
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MySQL connection id is 3221225472
Server version: 5.7.25 OceanBase 3.1.0 (r3-b20901e8c84d3ea774beeaca963c67d7802e4b4e) (Built Aug 10 2021 08:10:38)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> set session ob_query_timeout=1000000000; alter system bootstrap ZONE 'zone1' SERVER '172.20.249.52:2882', ZONE 'zone2' SERVER '172.20.249.49:2882', ZONE 'zone3' SERVER '172.20.249.51:2882' ;
Query OK, 0 rows affected (0.001 sec)

Query OK, 0 rows affected (28.839 sec)

MySQL [(none)]> Bye
[admin@obce00 ~]$ mysql -h 172.20.249.49 -u root@sys -P 2881 -p -c -A
Enter password:
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MySQL connection id is 3221751629
Server version: 5.7.25 OceanBase 3.1.0 (r3-b20901e8c84d3ea774beeaca963c67d7802e4b4e) (Built Aug 10 2021 08:10:38)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases;
+--------------------+
| Database           |
+--------------------+
| oceanbase          |
| information_schema |
| mysql              |
| SYS                |
| LBACSYS            |
| ORAAUDITOR         |
| test               |
+--------------------+
7 rows in set (0.016 sec)
```

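As a rule, if you follow the previous steps exactly when setting up the directory structure, permissions, and startup parameters, the bootstrap succeeds. If it fails, common causes are:

+ The clock offset between cluster nodes exceeds 50 ms.
+ The network latency between cluster nodes exceeds 100 ms.
+ The OBSERVER directory structure or directory permissions on a cluster node are wrong.
+ The startup parameters of the `observer` process on a cluster node are wrong. Check the names of hidden parameters (such as `__min_full_resource_pool_memory`), whether the directory given by `-d` is correct, whether each `-z` maps to the right IP, and whether there are extra spaces or wrong separators (some places use `,`, others `;`).
+ The available memory on a cluster node is lower than the `memory_limit` value in the `observer` startup parameters.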
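Because a wrong `-z`/IP pairing is one of the causes listed above, it may help to generate the bootstrap statement from the same zone-to-IP list used to start the nodes. The sketch below assumes RPC port 2882 as in this deployment; `build_bootstrap_sql` is a hypothetical name, and it only prints an `alter system bootstrap` statement of the form shown earlier, which you then run in the client session.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an "alter system bootstrap" statement.
# Usage: build_bootstrap_sql RPC_PORT ZONE1 IP1 [ZONE2 IP2 ...]
build_bootstrap_sql() {
  local rpc_port=$1 sql="alter system bootstrap" sep=" " zone ip
  shift
  # Consume arguments two at a time: zone name, then server IP.
  while [ "$#" -ge 2 ]; do
    zone=$1 ip=$2
    shift 2
    sql+="${sep}ZONE '${zone}' SERVER '${ip}:${rpc_port}'"
    sep=", "
  done
  printf '%s;\n' "$sql"
}

# Example:
#   build_bootstrap_sql 2882 zone1 172.20.249.52 zone2 172.20.249.49 zone3 172.20.249.51
```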

## Set passwords

+ Cluster administrator (`root@sys`) password

By default, the cluster administrator (`root@sys`) has an empty password; set one here.

```sql
alter user root identified by '4S9wDbSr' ;
```

+ OBPROXY user (`proxyro`) password

By default, OBPROXY connects to the OceanBase cluster as the user `proxyro`. This user does not exist yet and must be created.

```sql
grant select on oceanbase.* to proxyro identified by 'SWoLCQRH' ;
```
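The passwords above (`4S9wDbSr`, `SWoLCQRH`) look like random 8-character alphanumeric strings. If you want to generate similar ones, one common approach, shown here as a sketch with a hypothetical function name, is to filter `/dev/urandom` through `tr`. Check the result against any password policy you have before using it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a random alphanumeric password of LENGTH characters (default 8).
gen_password() {
  local length=${1:-8}
  # LC_ALL=C makes tr treat the random bytes as single-byte characters.
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$length"
  echo
}

# Example:
#   root_pw=$(gen_password)
#   proxyro_pw=$(gen_password)
```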

## Install the OBPROXY software package

For a manual deployment, install the OceanBase OBPROXY software.

```bash
sudo rpm -ivh /tmp/obproxy-3.1.0-1.el8.x86_64.rpm
```

The Community Edition of OBPROXY is installed to `/home/admin/obproxy-<version>` by default.

```bash
[admin@obce00 ~]$ tree ~/obproxy-3.1.0/
/home/admin/obproxy-3.1.0/
└── bin
    ├── obproxy
    └── obproxyd.sh

1 directory, 2 files
```

For now, the Community Edition of OBPROXY installs only a few files; this may be adjusted later.

## Start the OBPROXY process

It is also recommended to start OBPROXY from its installation directory: the `obproxy` process creates an `etc` directory there to store its runtime parameters and a `log` directory for its logs.

In the command below, `-r` is the root server list (`ip:mysql_port` entries separated by semicolons), `-p` is the listen port, `-o` is a comma-separated list of startup parameters, and `-c` is the cluster name; `obproxy` echoes them back as `rs list`, `listen port`, `optstr`, and `cluster_name`.

```bash
cd ~/obproxy-3.1.0/ && bin/obproxy -r "172.20.249.52:2881;172.20.249.49:2881;172.20.249.51:2881" -p 2883 -o "enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false" -c obdemo

Output:
[admin@obce00 obproxy-3.1.0]$ cd ~/obproxy-3.1.0/ && bin/obproxy -r "172.20.249.52:2881;172.20.249.49:2881;172.20.249.51:2881" -p 2883 -o "enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false" -c obdemo
bin/obproxy -r 172.20.249.52:2881;172.20.249.49:2881;172.20.249.51:2881 -p 2883 -o enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false -c obdemo
rs list: 172.20.249.52:2881;172.20.249.49:2881;172.20.249.51:2881
listen port: 2883
optstr: enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false
cluster_name: obdemo
[admin@obce00 obproxy-3.1.0]$ ps -ef|grep obproxy
admin 38206 1 2 15:11 ? 00:00:00 bin/obproxy -r 172.20.249.52:2881;172.20.249.49:2881;172.20.249.51:2881 -p 2883 -o enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false -c obdemo
admin 38229 28904 0 15:11 pts/2 00:00:00 grep --color=auto obproxy
[admin@obce00 obproxy-3.1.0]$
```
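The `-r` value is a semicolon-separated list of `ip:mysql_port` entries. Because `;` is also the shell's command separator, the value must stay quoted, as in the command above. When the node list comes from a variable or an inventory, a small helper such as the hypothetical `build_rs_list` below (a sketch, not part of OBPROXY) can assemble it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the value for obproxy's -r option,
# "ip:port;ip:port;...", from the MySQL port and a list of OBSERVER IPs.
build_rs_list() {
  local port=$1 out="" ip
  shift
  for ip in "$@"; do
    out="${out}${out:+;}${ip}:${port}"  # insert ';' only between entries
  done
  printf '%s\n' "$out"
}
```

For example, `build_rs_list 2881 172.20.249.52 172.20.249.49 172.20.249.51` prints `172.20.249.52:2881;172.20.249.49:2881;172.20.249.51:2881`; store it in a variable and pass it as `-r "$RS_LIST"`, keeping the quotes.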

+ Check that OBPROXY is listening

By default, the `obproxy` process listens on two ports: 2883 for client connections and 2884 for Prometheus.

```bash
[admin@obce00 obproxy-3.1.0]$ netstat -ntlp |grep obproxy
(Not all processes could be identified, non-owned process info
will not be shown, you would have to be root to see it all.)
tcp 0 0 0.0.0.0:2883 0.0.0.0:* LISTEN 38206/bin/obproxy
tcp 0 0 0.0.0.0:2884 0.0.0.0:* LISTEN 38206/bin/obproxy
```
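On hosts where `netstat` (from net-tools) is not installed, `ss -ntl` prints similar information. Below is a minimal sketch of a check that works on either tool's output; `check_listen_ports` is a hypothetical helper that reads the socket list on standard input and only looks for `:<port>` on `LISTEN` lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `netstat -ntl` or `ss -ntl` output on stdin and
# report each expected port as LISTEN or MISSING. Returns non-zero if any
# expected port is not listening.
check_listen_ports() {
  local listening port rc=0
  listening=$(grep 'LISTEN' || true)   # keep only listening sockets from stdin
  for port in "$@"; do
    # ":<port>" followed by whitespace matches the local-address column only
    if printf '%s\n' "$listening" | grep -Eq ":${port}[[:space:]]"; then
      echo "port ${port}: LISTEN"
    else
      echo "port ${port}: MISSING"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `ss -ntl | check_listen_ports 2883 2884` exits non-zero if either port is missing, so it can be used as a step in a deployment script.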

+ Log in to OBPROXY and change the passwords

Username: `root@proxysys`, port: `2883`, initial password: empty.

```bash
mysql -h 172.20.249.50 -u root@proxysys -P 2883 -p

MySQL [(none)]> show proxyconfig like '%sys_password%';
+-----------------------+-------+--------------------------------+-------------+---------------+
| name                  | value | info                           | need_reboot | visible_level |
+-----------------------+-------+--------------------------------+-------------+---------------+
| observer_sys_password |       | password for observer sys user | false       | SYS           |
| obproxy_sys_password  |       | password for obproxy sys user  | false       | SYS           |
+-----------------------+-------+--------------------------------+-------------+---------------+
2 rows in set (0.000 sec)
```

OBPROXY user passwords are changed by setting parameters with `alter proxyconfig set`.

```sql
alter proxyconfig set obproxy_sys_password = 'wPhGddup' ;
```

You also need to set the password that OBPROXY uses to connect to the OceanBase cluster as the `proxyro` user; otherwise OBPROXY cannot connect to the cluster. This is the password of the `proxyro` user created earlier, after the OceanBase cluster was bootstrapped.

```sql
alter proxyconfig set observer_sys_password = 'SWoLCQRH' ;
```
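Both passwords can also be set in one non-interactive step. The sketch below uses a hypothetical helper, `proxy_password_sql`, which only prints the two `alter proxyconfig set` statements shown above; piping them into the `mysql` client applies them. It rejects passwords containing a single quote, because those would break the quoted SQL literals.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the ALTER PROXYCONFIG statements for
#   $1 = new password of root@proxysys      (obproxy_sys_password)
#   $2 = password of the proxyro cluster user (observer_sys_password),
#        which must match the password used when proxyro was created.
proxy_password_sql() {
  if [ "$#" -ne 2 ]; then
    echo "usage: proxy_password_sql <obproxy_sys_password> <observer_sys_password>" >&2
    return 1
  fi
  case "$1$2" in
    *"'"*) echo "passwords containing a single quote are not supported" >&2; return 1 ;;
  esac
  printf "alter proxyconfig set obproxy_sys_password = '%s' ;\n" "$1"
  printf "alter proxyconfig set observer_sys_password = '%s' ;\n" "$2"
}
```

For example: `proxy_password_sql 'wPhGddup' 'SWoLCQRH' | mysql -h 172.20.249.50 -u root@proxysys -P 2883`. No `-p` is needed here because the initial `root@proxysys` password is empty; after the change, log in with the new password.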

Exit, then connect to the OceanBase cluster through OBPROXY. If you can view all sessions, OBPROXY has been deployed successfully.

```bash
mysql -h172.20.249.50 -uroot@sys#obdemo -P2883 -p4S9wDbSr -c -A oceanbase

Output:
[admin@obce00 obproxy-3.1.0]$ mysql -h172.20.249.50 -uroot@sys#obdemo -P2883 -p4S9wDbSr -c -A oceanbase
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MySQL connection id is 5
Server version: 5.6.25 OceanBase 3.1.0 (r3-b20901e8c84d3ea774beeaca963c67d7802e4b4e) (Built Aug 10 2021 08:10:38)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [oceanbase]> show processlist;
+------+--------+------+---------------------+-----------+-------------+-------------------+-------------------+-------+-------+
| Id   | Tenant | User | Host                | db        | trans_count | svr_session_count | state             | tid   | pid   |
+------+--------+------+---------------------+-----------+-------------+-------------------+-------------------+-------+-------+
|    5 | sys    | root | 172.20.249.50:41524 | oceanbase |           0 |                 1 | MCS_ACTIVE_READER | 38206 | 38206 |
+------+--------+------+---------------------+-----------+-------------+-------------------+-------------------+-------+-------+
1 row in set (0.000 sec)

MySQL [oceanbase]> show full processlist;
+------------+---------+--------+---------------------+-----------+---------+------+--------+-----------------------+---------------+------+--------------+
| Id         | User    | Tenant | Host                | db        | Command | Time | State  | Info                  | Ip            | Port | Proxy_sessid |
+------------+---------+--------+---------------------+-----------+---------+------+--------+-----------------------+---------------+------+--------------+
| 3222013775 | root    | sys    | 172.20.249.50:57436 | oceanbase | Query   |    0 | ACTIVE | show full processlist | 172.20.249.51 | 2881 |            4 |
| 3221751633 | proxyro | sys    | 172.20.249.50:49344 | oceanbase | Sleep   |    2 | SLEEP  | NULL                  | 172.20.249.49 | 2881 |            3 |
+------------+---------+--------+---------------------+-----------+---------+------+--------+-----------------------+---------------+------+--------------+
2 rows in set (0.022 sec)
```
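The login name used through OBPROXY has the form `<user>@<tenant>#<cluster>`, for example `root@sys#obdemo` above. The hypothetical helper below is a small sketch that composes it from its parts and refuses empty components. Note also that passing the password as `-p4S9wDbSr` exposes it in the shell history and in `ps` output; `-p` on its own makes the client prompt for it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compose an OBPROXY login name <user>@<tenant>#<cluster>.
ob_proxy_user() {
  if [ -z "$1" ] || [ -z "$2" ] || [ -z "$3" ]; then
    echo "usage: ob_proxy_user <user> <tenant> <cluster>" >&2
    return 1
  fi
  printf '%s@%s#%s\n' "$1" "$2" "$3"
}
```

For example: `mysql -h172.20.249.50 -P2883 -u"$(ob_proxy_user root sys obdemo)" -p -c -A oceanbase`.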

138

docs/docs/junior-training/ob-quick-start/chapter02/2.12.md

Normal file

@ -0,0 +1,138 @@

# FAQ

## Machine environment initialization issues

### `ulimit` settings do not take effect

+ Symptom

```bash
ulimit -a
...
stack size (kbytes, -s) 1024
...
```

When the admin user then runs `ulimit -s` to change the stack size, the operating system reports `cannot modify limit: Operation not permitted`.

Incorrect `ulimit` settings can prevent the OBSERVER process from starting.

+ Cause

The admin user's ulimit configuration may not take effect because PAM is not enabled for SSH logins. PAM applies the configured ulimit values to login sessions; without it, the SSHD default values are used (here, a stack size of 1024).

+ Solution

Edit the SSHD configuration file `sshd_config` and uncomment `UsePAM yes`.

```bash
sudo vim /etc/ssh/sshd_config

UsePAM yes
```

Restart the SSHD service.

```bash
sudo systemctl restart sshd
```
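The same edit can be scripted. The sketch below assumes GNU `sed` (for `sed -i -E`), as shipped with CentOS; `enable_use_pam` is a hypothetical helper that takes the file path as an argument so it can be tried on a copy first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure "UsePAM yes" is active in an
# sshd_config-style file (default /etc/ssh/sshd_config). Requires GNU sed.
enable_use_pam() {
  local cfg=${1:-/etc/ssh/sshd_config}
  if grep -Eq '^[#[:space:]]*UsePAM[[:space:]]' "$cfg"; then
    # Rewrite every UsePAM line, commented out or not, to "UsePAM yes".
    sed -i -E 's/^[#[:space:]]*UsePAM[[:space:]].*/UsePAM yes/' "$cfg"
  else
    # No UsePAM line at all: append one.
    printf '%s\n' 'UsePAM yes' >> "$cfg"
  fi
}
```

Back up the file, run the helper as root, and validate the result with `sudo sshd -t` before `sudo systemctl restart sshd`; a broken `sshd_config` can lock you out of remote logins.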

Then edit `/etc/security/limits.conf` again.

```bash
sudo vim /etc/security/limits.conf

* soft nofile 655360
* hard nofile 655360
* soft nproc 655360
* hard nproc 655360
* soft core unlimited
* hard core unlimited
* soft stack unlimited
* hard stack unlimited
```
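To apply these entries without an interactive editor, a sketch like the following can append them. `set_ob_limits` is hypothetical; it skips any `<domain> <type> <item>` combination that is already present and leaves existing values untouched, so an older conflicting entry (for example a smaller stack size) still has to be fixed by hand.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the limits listed above to a limits.conf-style
# file (default /etc/security/limits.conf), skipping any
# "<domain> <type> <item>" entry that already exists. Existing entries are
# not modified, even if their value differs.
set_ob_limits() {
  local file=${1:-/etc/security/limits.conf} entry key
  while read -r entry; do
    [ -n "$entry" ] || continue
    key=$(printf '%s\n' "$entry" | awk '{ print $1, $2, $3 }')  # e.g. "* soft nofile"
    if [ -f "$file" ] && awk -v k="$key" '(($1 " " $2 " " $3) == k) { found = 1 } END { exit !found }' "$file"; then
      echo "skip (already set): $key"
    else
      printf '%s\n' "$entry" >> "$file"
    fi
  done <<'EOF'
* soft nofile 655360
* hard nofile 655360
* soft nproc 655360
* hard nproc 655360
* soft core unlimited
* hard core unlimited
* soft stack unlimited
* hard stack unlimited
EOF
}
```

Try it on a copy first, e.g. `cp /etc/security/limits.conf /tmp/limits.test && set_ob_limits /tmp/limits.test`, then run it as root against the real file.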

Log in again and check the effective values with `ulimit -a`.
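Checking these values by eye is error-prone, so here is a minimal sketch that reads `ulimit -a` output in the format bash prints (as in the symptom above) and compares open files (`-n`), max user processes (`-u`), core file size (`-c`), and stack size (`-s`) with the targets from `limits.conf`. `check_ulimits` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read bash's `ulimit -a` output on stdin and compare
# selected limits with the values configured in limits.conf above.
# Prints one line per checked limit; exits non-zero on any mismatch.
check_ulimits() {
  awk '
    BEGIN {
      want["-n"] = "655360"; want["-u"] = "655360"
      want["-c"] = "unlimited"; want["-s"] = "unlimited"
      bad = 0
    }
    {
      for (f in want) {
        # bash prints the flag as "(-n)" or "(kbytes, -s)"; the value is the last field
        if (index($0, f ")") > 0) {
          status = ($NF == want[f]) ? "ok" : "MISMATCH"
          if (status != "ok") bad = 1
          printf "%s %s (want %s) %s\n", f, $NF, want[f], status
        }
      }
    }
    END { exit bad }'
}
```

Run `ulimit -a | check_ulimits` in a fresh login session.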

## OBD deployment issues

### Directory not empty

+ Symptom

```bash
Initializes cluster work home x
[ERROR] fail to init zone1(172.20.249.53) data path: /data is not empty
```

+ Cause

On CentOS 8.0, a newly created file system contains a default `lost+found` directory.

+ Solution

Empty the directories of the newly created file systems. This deletes everything in them, so only do it on file systems that do not hold any data yet.

`sudo /bin/rm -rf /data/* /redo/*`
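Before deleting anything, it is worth confirming what a directory actually contains. The hypothetical `dir_is_empty` helper below is a minimal sketch: it uses `ls -A`, which also lists hidden entries. A glob such as `/data/*` does not match hidden files, so a directory can still be non-empty after the `rm` command above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether a directory is empty.
# Returns 0 if empty, 1 if it has entries (listed on stderr, hidden ones
# included), 2 if the directory does not exist.
dir_is_empty() {
  local dir=$1
  if [ ! -d "$dir" ]; then
    echo "$dir does not exist" >&2
    return 2
  fi
  if [ -n "$(ls -A "$dir")" ]; then
    echo "$dir is not empty:" >&2
    ls -A "$dir" >&2
    return 1
  fi
  echo "$dir is empty"
}
```

For example: `for d in /data /redo; do dir_is_empty "$d"; done`.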

### Other common errors

+ Symptom

An `obd` command fails.

+ Cause

Look for the cause in the `obd` command log.

```bash
# open the log read-only (-R), positioned at the last line (+)
vim -R + ~/.obd/log/obd
```

+ Solution

Resolve the problem according to the error description in the log.
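For a quick look at the most recent failures without opening the whole log, a sketch like the following can help. `obd_recent_errors` is hypothetical; it assumes error lines in `~/.obd/log/obd` contain the string `ERROR`, like the `[ERROR]` messages OBD prints to the console, and the pattern can be overridden if your log uses a different marker.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the last N lines of the OBD log that contain
# PATTERN. Arguments (all optional): log file, N, pattern.
# Assumption: error lines contain "ERROR"; adjust the pattern if needed.
obd_recent_errors() {
  local log=${1:-$HOME/.obd/log/obd} n=${2:-20} pattern=${3:-ERROR}
  grep -F -- "$pattern" "$log" | tail -n "$n"
}
```

`obd_recent_errors` prints the last 20 matching lines; `obd_recent_errors ~/.obd/log/obd 50` prints the last 50.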

## OBSERVER startup failures

### Shared library not found

+ Symptom

When the OBSERVER process is started manually, it reports that a shared library cannot be found.

```bash
[admin@obce02 ~]$ cd oceanbase-ce/
[admin@obce02 oceanbase-ce]$ bin/observer
bin/observer: error while loading shared libraries: libmariadb.so.3: cannot open shared object file: No such file or directory
```

+ Cause

The OceanBase library directory has not been added to the `LD_LIBRARY_PATH` environment variable.

The library directory looks like this:

```bash
[admin@obce02 ~]$ tree oceanbase-ce/
oceanbase-ce/
├── admin
├── bin
│   └── observer -> /home/admin/.obd/repository/oceanbase-ce/3.1.0/84bd2fe27f8b8243cc57d8a3f68b4c50f94aab80/bin/observer
<....>
├── lib
│   ├── libaio.so -> /home/admin/.obd/repository/oceanbase-ce/3.1.0/84bd2fe27f8b8243cc57d8a3f68b4c50f94aab80/lib/libaio.so
│   ├── libaio.so.1 -> /home/admin/.obd/repository/oceanbase-ce/3.1.0/84bd2fe27f8b8243cc57d8a3f68b4c50f94aab80/lib/libaio.so.1
│   ├── libaio.so.1.0.1 -> /home/admin/.obd/repository/oceanbase-ce/3.1.0/84bd2fe27f8b8243cc57d8a3f68b4c50f94aab80/lib/libaio.so.1.0.1
│   ├── libmariadb.so -> /home/admin/.obd/repository/oceanbase-ce/3.1.0/84bd2fe27f8b8243cc57d8a3f68b4c50f94aab80/lib/libmariadb.so
│   └── libmariadb.so.3 -> /home/admin/.obd/repository/oceanbase-ce/3.1.0/84bd2fe27f8b8243cc57d8a3f68b4c50f94aab80/lib/libmariadb.so.3
```
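`ldd` shows which shared libraries the dynamic loader cannot resolve, which confirms the cause before you change the environment. The sketch below parses its output; `missing_libs` and `find_in_lib_dir` are hypothetical helpers, and the second one checks whether each missing library exists in a candidate directory such as `~/oceanbase-ce/lib`.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for diagnosing "error while loading shared libraries".

# Read `ldd` output on stdin and print each library reported as "=> not found".
missing_libs() {
  awk '/=> not found/ { print $1 }'
}

# Read `ldd` output on stdin and report, for each missing library,
# whether a file with that name exists in directory $1.
find_in_lib_dir() {
  local dir=$1 lib
  missing_libs | while read -r lib; do
    if [ -e "$dir/$lib" ]; then
      echo "$lib: found in $dir"
    else
      echo "$lib: not in $dir"
    fi
  done
}
```

For example: `ldd ~/oceanbase-ce/bin/observer | find_in_lib_dir ~/oceanbase-ce/lib`. Once `LD_LIBRARY_PATH` includes the library directory, `ldd ~/oceanbase-ce/bin/observer | missing_libs` should print nothing.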

+ Solution

Add the OceanBase library directory to `LD_LIBRARY_PATH`. To make the setting persistent, also add it to `.bash_profile`.

```bash
echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase-ce/lib/' >> ~/.bash_profile
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase-ce/lib/
```

240

docs/docs/junior-training/ob-quick-start/chapter02/2.13.md

Normal file

@ -0,0 +1,240 @@

# Appendix

## A1. Configuration file for a three-node OceanBase cluster in production

For production machines with more than 256 GB of memory, use the following configuration file as a reference.

```yaml
# Only need to configure when remote login is required
user:
  username: admin
  # password: your password if need
  key_file: /home/admin/.ssh/id_rsa
  # port: your ssh port, default 22
  # timeout: ssh connection timeout (second), default 30
oceanbase-ce:
  servers:
    - name: obce01
      # Please don't use hostname, only IP can be supported
      ip: 172.20.249.53
    - name: obce02
      ip: 172.20.249.55
    - name: obce03
      ip: 172.20.249.56
  global:
    # Please set devname as the network adaptor's name whose ip is in the setting of servers.
    # if set servers as "127.0.0.1", please set devname as "lo"
    # if current ip is 192.168.1.10, and the ip's network adaptor's name is "eth0", please use "eth0"
    devname: bond0
    cluster_id: 2
    # please set memory limit to a suitable value which is matching resource.
    # memory_limit: 200G # The maximum running memory for an observer
    # system_memory: 30G # The reserved system memory. system_memory is reserved for general tenants. The default value is 30G.
    minor_freeze_times: 100
    minor_warm_up_duration_time: 0
    freeze_trigger_percentage: 40
    enable_merge_by_turn: FALSE
    datafile_disk_percentage: 50 # The percentage of the data_dir space to the total disk space. This value takes effect only when datafile_size is 0. The default value is 90.
    # datafile_size: 500G
    syslog_level: INFO # System log level. The default value is INFO.
    enable_syslog_wf: false # Print system logs whose levels are higher than WARNING to a separate log file. The default value is true.
    enable_syslog_recycle: true # Enable auto system log recycling or not. The default value is false.
    max_syslog_file_count: 50 # The maximum number of reserved log files before enabling auto recycling. The default value is 0.
    # observer cluster name, consistent with obproxy's cluster_name
    appname: obce-3zones
    root_password: 0EI5N08d # root user password, can be empty
    proxyro_password: uY7Yf8zx # proxyro user password, consistent with obproxy's observer_sys_password, can be empty
  obce01:
    mysql_port: 2881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo
    zone: zone1
  obce02:
    mysql_port: 2881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo
    zone: zone2
  obce03:
    mysql_port: 2881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo
    zone: zone3
obproxy:
  servers:
    - 172.20.249.53
    - 172.20.249.55
    - 172.20.249.56
  # Set dependent components for the component.
  # When the associated configurations are not done, OBD will automatically get these configurations from the dependent components.
  depends:
    - oceanbase-ce
  global:
    listen_port: 2883 # External port. The default value is 2883.
    prometheus_listen_port: 2884 # The Prometheus port. The default value is 2884.
    home_path: /home/admin/obproxy
    # oceanbase root server list
    # format: ip:mysql_port;ip:mysql_port
    rs_list: 172.20.249.53:2881;172.20.249.55:2881;172.20.249.56:2881
    enable_cluster_checkout: false
    # observer cluster name, consistent with oceanbase-ce's appname
    cluster_name: obce-3zones
    obproxy_sys_password: 0MdTv1tm # obproxy sys user password, can be empty
    observer_sys_password: uY7Yf8zx # proxyro user password, consistent with oceanbase-ce's proxyro_password, can be empty
```
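The comments in this file require two pairs of settings to match: `appname` with OBPROXY's `cluster_name`, and `proxyro_password` with OBPROXY's `observer_sys_password`. A minimal sketch of a pre-deployment check follows. `yaml_value` and `check_obd_pairs` are hypothetical helpers; they match uncommented `key: value` lines with `awk` and ignore YAML nesting, so they assume each key appears only once in the file. An OBPROXY key that is absent or commented out is skipped, since OBD then takes the value from the `depends` component.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: sanity-check paired settings in an OBD config file.
# Simplification: YAML nesting is ignored; each key is assumed to appear once.

# Print the value of the first uncommented "<key>: value" line in file $1.
yaml_value() {
  awk -v k="$2" '$1 == (k ":") { print ($2 ~ /^#/ ? "" : $2); exit }' "$1"
}

# Report mismatches; OBPROXY-side keys that are not set are skipped.
check_obd_pairs() {
  local file=$1 rc=0 a b
  a=$(yaml_value "$file" appname)
  b=$(yaml_value "$file" cluster_name)
  if [ -n "$b" ] && [ "$a" != "$b" ]; then
    echo "appname ($a) != cluster_name ($b)"
    rc=1
  fi
  a=$(yaml_value "$file" proxyro_password)
  b=$(yaml_value "$file" observer_sys_password)
  if [ -n "$b" ] && [ "$a" != "$b" ]; then
    echo "proxyro_password != observer_sys_password"
    rc=1
  fi
  return "$rc"
}
```

For example, `check_obd_pairs obce-3zones.yaml` (the file name is only an example) prints each mismatch and exits non-zero if there is one.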

## A2. Test environment: a six-node cluster simulated on three ECS instances

Each machine runs two nodes, one listening on 2881/2882 and the other on 3881/3882, each with its own `home_path`, `data_dir`, and `redo_dir`.

```yaml
# Only need to configure when remote login is required
user:
  username: admin
  # password: your password if need
  key_file: /home/admin/.ssh/id_rsa
  # port: your ssh port, default 22
  # timeout: ssh connection timeout (second), default 30
oceanbase-ce:
  servers:
    - name: obce01
      # Please don't use hostname, only IP can be supported
      ip: 172.20.249.53
    - name: obce02
      ip: 172.20.249.55
    - name: obce03
      ip: 172.20.249.56
    - name: obce04
      # Please don't use hostname, only IP can be supported
      ip: 172.20.249.53
    - name: obce05
      ip: 172.20.249.55
    - name: obce06
      ip: 172.20.249.56
  global:
    # Please set devname as the network adaptor's name whose ip is in the setting of servers.
    # if set servers as "127.0.0.1", please set devname as "lo"
    # if current ip is 192.168.1.10, and the ip's network adaptor's name is "eth0", please use "eth0"
    devname: eth0
    cluster_id: 2
    # please set memory limit to a suitable value which is matching resource.
    memory_limit: 10G # The maximum running memory for an observer
    system_memory: 3G # The reserved system memory. system_memory is reserved for general tenants. The default value is 30G.
    stack_size: 512K
    cpu_count: 16
    cache_wash_threshold: 1G
    __min_full_resource_pool_memory: 268435456
    workers_per_cpu_quota: 10
    schema_history_expire_time: 1d
    # The value of net_thread_count had better be same as cpu's core number.
    net_thread_count: 4
    major_freeze_duty_time: Disable
    minor_warm_up_duration_time: 0
    freeze_trigger_percentage: 40
    enable_separate_sys_clog: 0
    enable_merge_by_turn: FALSE
    #datafile_disk_percentage: 20 # The percentage of the data_dir space to the total disk space. This value takes effect only when datafile_size is 0. The default value is 90.
    datafile_size: 50G
    syslog_level: WARN # System log level. The default value is INFO.
    enable_syslog_wf: false # Print system logs whose levels are higher than WARNING to a separate log file. The default value is true.
    enable_syslog_recycle: true # Enable auto system log recycling or not. The default value is false.
    max_syslog_file_count: 10 # The maximum number of reserved log files before enabling auto recycling. The default value is 0.
    # observer cluster name, consistent with obproxy's cluster_name
    appname: obce-3zones
    root_password: 0EI5N08d # root user password, can be empty
    proxyro_password: uY7Yf8zx # proxyro user password, consistent with obproxy's observer_sys_password, can be empty
  obce01:
    mysql_port: 2881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data/1
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo/1
    zone: zone1
  obce02:
    mysql_port: 2881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data/1
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo/1
    zone: zone2
  obce03:
    mysql_port: 2881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 2882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data/1
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo/1
    zone: zone3
  obce04:
    mysql_port: 3881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 3882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce2
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data/2
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo/2
    zone: zone1
  obce05:
    mysql_port: 3881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 3882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce2
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data/2
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo/2
    zone: zone2
  obce06:
    mysql_port: 3881 # External port for OceanBase Database. The default value is 2881.
    rpc_port: 3882 # Internal port for OceanBase Database. The default value is 2882.
    # The working directory for OceanBase Database. OceanBase Database is started under this directory. This is a required field.
    home_path: /home/admin/oceanbase-ce2
    # The directory for data storage. The default value is $home_path/store.
    data_dir: /data/2
    # The directory for clog, ilog, and slog. The default value is the same as the data_dir value.
    redo_dir: /redo/2
    zone: zone3
obproxy:
  servers:
    - 172.20.249.54
  # Set dependent components for the component.
  # When the associated configurations are not done, OBD will automatically get these configurations from the dependent components.
  depends:
    - oceanbase-ce
  global:
    listen_port: 2883 # External port. The default value is 2883.
    prometheus_listen_port: 2884 # The Prometheus port. The default value is 2884.
    home_path: /home/admin/obproxy
    # oceanbase root server list
    # format: ip:mysql_port;ip:mysql_port
    rs_list: 172.20.249.53:2881;172.20.249.55:2881;172.20.249.56:2881
    enable_cluster_checkout: false
    # observer cluster name, consistent with oceanbase-ce's appname
    # cluster_name: obce-3zones
    obproxy_sys_password: 0MdTv1tm # obproxy sys user password, can be empty
    # observer_sys_password: uY7Yf8zx # proxyro user password, consistent with oceanbase-ce's proxyro_password, can be empty
```

271

docs/docs/junior-training/ob-quick-start/chapter02/2.2.md

Normal file

@ -0,0 +1,271 @@

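## How to quickly try out OceanBase

Before deploying OceanBase Community Edition, we recommend taking a quick look at a ready-made OceanBase Community Edition environment in Docker. We provide an OceanBase Community Edition Docker image that lets you quickly deploy and start an OceanBase Community Edition container on your laptop or desktop.

### Machine resource requirements

The OceanBase Docker container has the following resource requirements:

+ At least 10 GB of available memory. Note that this means memory that is still free, not total memory.
+ At least 10 GB of free space in the disk directory. With less than 10 GB you may run into problems later, such as running out of directory space.
+ At least 2 logical CPUs are recommended.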
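On a Linux host, the memory and CPU requirements can be checked before pulling the image. The sketch below is a hypothetical helper, `check_docker_host`; it reads `MemAvailable` (in kB) from `/proc/meminfo` and uses `nproc` for the logical CPU count, so it does not apply to macOS or Windows, where the resource settings of Docker Desktop are what matter.

```bash
#!/usr/bin/env bash
# Hypothetical helper (Linux only): compare available memory and logical CPUs
# with the minimums above (10 GB available memory, 2 logical CPUs).
# Optional arguments: a /proc/meminfo-style file and a CPU count override.
check_docker_host() {
  local meminfo=${1:-/proc/meminfo} cpus=${2:-} avail_kb rc=0
  [ -n "$cpus" ] || cpus=$(nproc)
  avail_kb=$(awk '$1 == "MemAvailable:" { print $2 }' "$meminfo")
  avail_kb=${avail_kb:-0}
  if [ "$avail_kb" -lt $((10 * 1024 * 1024)) ]; then   # 10 GB expressed in kB
    echo "available memory: $((avail_kb / 1024)) MB (< 10 GB)"
    rc=1
  fi
  if [ "$cpus" -lt 2 ]; then
    echo "logical CPUs: $cpus (< 2)"
    rc=1
  fi
  return "$rc"
}
```

Run `check_docker_host` without arguments to check the current host, and use `df -h` on the target directory for the disk requirement.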

### 安装 Docker

|

||||

|

||||

Docker 是免费软件,在 Windows、Linux、Mac 系统里都可以安装运行。下载和安装地址请参考 : [https://docs.docker.com/get-docker/](https://docs.docker.com/get-docker/) 。

|

||||

|

||||

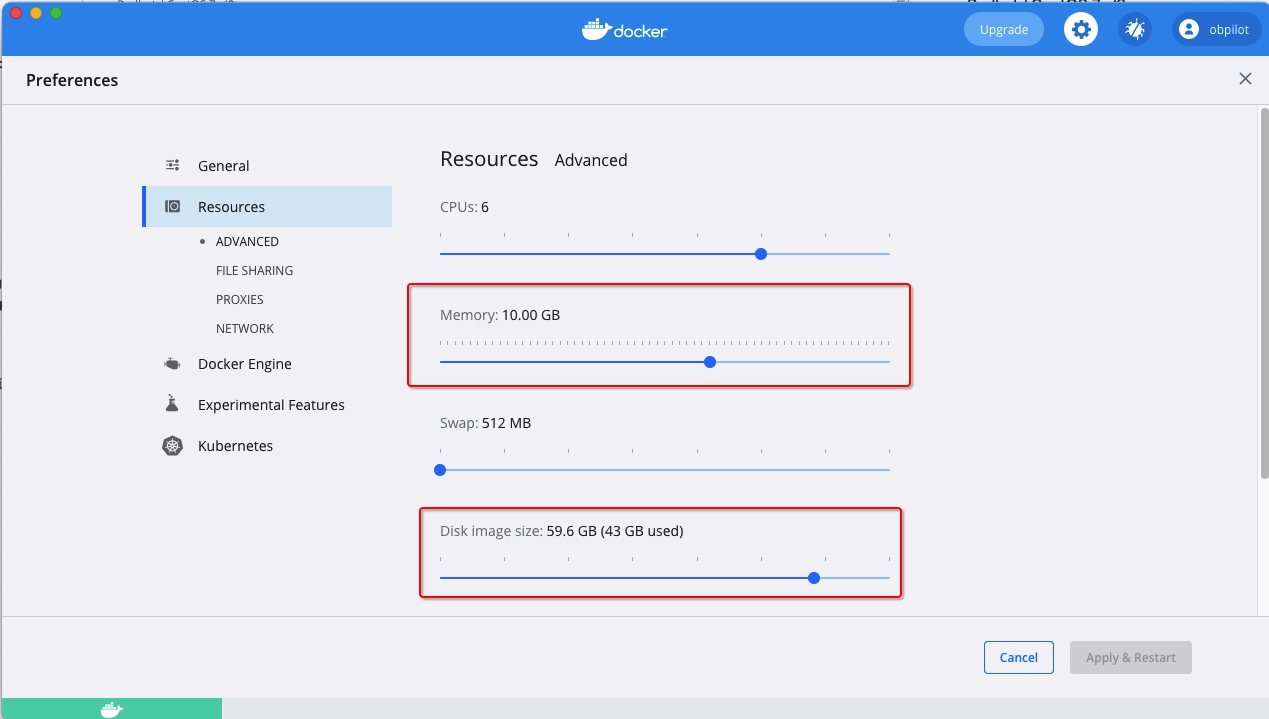

Docker 安装后,对默认的容器资源有限制,这里需要手动调整一下。下面以 Mac电脑上的 Docker 设置为例说明。

|

||||

|

||||

|

||||

|

||||

+ Common Docker commands

```bash
# Show the Docker version
docker version
# Show Docker system information
docker info
# Show a container's logs
docker logs <container name or ID>
# Troubleshooting: check the Docker service status (Linux)
service docker status
# Start or stop Docker (Linux)
service docker start
service docker stop

# Follow a container's logs
docker logs -f <container name or ID>

# Cleanup commands. DANGEROUS!!!
# Remove unused containers
docker container prune
# Remove unused images
docker image prune
# Remove unused volumes
docker volume prune
```

### Download the image and start it

OceanBase Docker image: [https://hub.docker.com/r/oceanbase/obce-mini](https://hub.docker.com/r/oceanbase/obce-mini).

The source code of the image is on GitHub: [https://github.com/oceanbase/oceanbase/tree/master/tools/docker/mini](https://github.com/oceanbase/oceanbase/tree/master/tools/docker/mini). Take a look if you are interested.

```bash
docker search oceanbase # search for OceanBase-related images

docker pull oceanbase/obce-mini
```

Start the OceanBase Docker container.

```bash
docker run -p 2881:2881 --name obce-mini -d -e OB_HOME_PATH="/root/obce/" -e OB_TENANT_NAME="obmysql" oceanbase/obce-mini

Output:
➜ ~ docker run -p 2881:2881 --name obce-mini -d -e OB_HOME_PATH="/root/obce/" -e OB_TENANT_NAME="obmysql" oceanbase/obce-mini
45180d71f504981ed588b7de0e5abf952511f2c2f9ee5eac0446b6cf0d4dc02c
➜ ~ docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
45180d71f504 oceanbase/obce-mini "/bin/sh -c _boot" 4 seconds ago Up 2 seconds 0.0.0.0:2881->2881/tcp, :::2881->2881/tcp obce-mini
➜ ~
```
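`docker run -d` returns as soon as the container starts, while the cluster inside keeps initializing for a few minutes. Checking only that port 2881 accepts TCP connections may not be enough, because Docker's port mapping can accept connections on the host before OceanBase is listening inside the container, so it is more reliable to retry a real login. The sketch below is a generic, hypothetical `retry_until` helper that reruns a command until it succeeds or a timeout (in seconds) expires.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rerun a command every INTERVAL seconds until it
# succeeds or TIMEOUT seconds have elapsed.
# Usage: retry_until TIMEOUT INTERVAL command [args...]
retry_until() {
  local timeout=$1 interval=$2 start now
  shift 2
  start=$(date +%s)
  until "$@"; do
    now=$(date +%s)
    if [ $((now - start)) -ge "$timeout" ]; then
      echo "gave up after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

For example, assuming a MySQL client is installed on the host and that the `root` user of the `sys` tenant in this image has no password (check the image documentation): `retry_until 600 10 mysql -h127.0.0.1 -P2881 -uroot -e 'select 1'`.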

### View the container startup logs

A freshly started OceanBase container needs a few minutes to initialize the cluster. You can follow the progress in the container startup logs.

```bash
docker logs obce-mini

Output:
➜ ~ docker logs obce-mini
generate boot.yaml ...
create boot dirs and deploy OceanBase cluster ...
Package oceanbase-ce-3.1.0 is available.
install oceanbase-ce-3.1.0 for local ok
+-----------------------------------------------------------------------------+
|                                   Packages                                  |
+--------------+---------+---------+------------------------------------------+
| Repository   | Version | Release | Md5                                      |
+--------------+---------+---------+------------------------------------------+
| oceanbase-ce | 3.1.0   | 2.el7   | afd11d52f83eef4b456d77969fde620c4bfba85e |
+--------------+---------+---------+------------------------------------------+
Open ssh connection ok
Remote oceanbase-ce-3.1.0-afd11d52f83eef4b456d77969fde620c4bfba85e repository install ok
Remote oceanbase-ce-3.1.0-afd11d52f83eef4b456d77969fde620c4bfba85e repository lib check !!
[WARN] 127.0.0.1 oceanbase-ce-3.1.0-afd11d52f83eef4b456d77969fde620c4bfba85e require: libaio.so.1
[WARN] 127.0.0.1 oceanbase-ce-3.1.0-afd11d52f83eef4b456d77969fde620c4bfba85e require: libmariadb.so.3

Try to get lib-repository
Package oceanbase-ce-libs-3.1.0 is available.
install oceanbase-ce-libs-3.1.0 for local ok
Use oceanbase-ce-libs-3.1.0-47300ca1ac4c62493caf3e9235b105e242e533b5 for oceanbase-ce-3.1.0-afd11d52f83eef4b456d77969fde620c4bfba85e
Remote oceanbase-ce-libs-3.1.0-47300ca1ac4c62493caf3e9235b105e242e533b5 repository install ok
Remote oceanbase-ce-3.1.0-afd11d52f83eef4b456d77969fde620c4bfba85e repository lib check ok
Cluster status check ok
127.0.0.1 initializes cluster work home
mini-ce deployed
start OceanBase cluster ...
Get local repositories and plugins ok
Open ssh connection ok
Cluster param config check ok