Move the docs folder

This commit is contained in:

committed by

LINxiansheng

LINxiansheng

parent

7c6dcc6712

commit

d42f317422

@ -0,0 +1,23 @@

|

||||

SQL 请求执行流程

|

||||

===============================

|

||||

|

||||

|

||||

|

||||

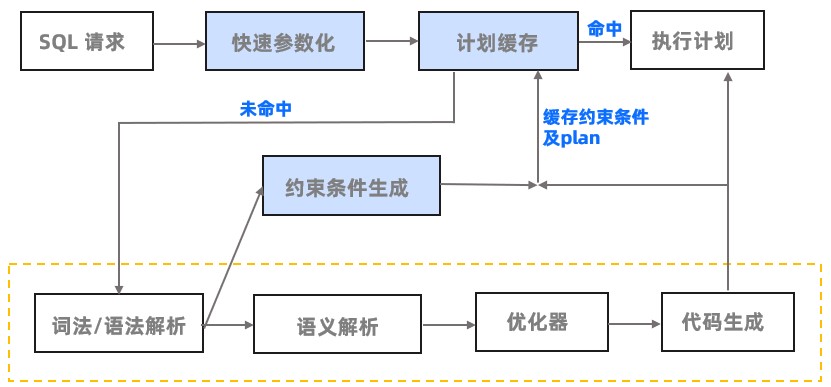

SQL 引擎从接受 SQL 请求到执行的典型流程如下图所示:

|

||||

|

||||

|

||||

|

||||

下表为 SQL 请求执行流程的步骤说明。

|

||||

|

||||

|

||||

| **步骤** | **说明** |

|

||||

|-----------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

|

||||

| Parser(词法/语法解析模块) | 在收到用户发送的 SQL 请求串后,Parser 会将字符串分成一个个的"单词",并根据预先设定好的语法规则解析整个请求,将 SQL 请求字符串转换成带有语法结构信息的内存数据结构,称为语法树(Syntax Tree)。 |

|

||||

| Plan Cache(执行计划缓存模块) | 执行计划缓存模块会将该 SQL 第一次生成的执行计划缓存在内存中,后续的执行可以反复执行这个计划,避免了重复查询优化的过程。 |

|

||||

| Resolver(语义解析模块) | Resolver 将生成的语法树转换为带有数据库语义信息的内部数据结构。在这一过程中,Resolver 将根据数据库元信息将 SQL 请求中的 Token 翻译成对应的对象(例如库、表、列、索引等),生成的数据结构叫做 Statement Tree。 |

|

||||

| Transfomer(逻辑改写模块) | 分析用户 SQL 的语义,并根据内部的规则或代价模型,将用户 SQL 改写为与之等价的其他形式,并将其提供给后续的优化器做进一步的优化。Transformer 的工作方式是在原 Statement Tree 上做等价变换,变换的结果仍然是一棵 Statement Tree。 |

|

||||

| Optimizer(优化器) | 优化器是整个 SQL 请求优化的核心,其作用是为 SQL 请求生成最佳的执行计划。在优化过程中,优化器需要综合考虑 SQL 请求的语义、对象数据特征、对象物理分布等多方面因素,解决访问路径选择、联接顺序选择、联接算法选择、分布式计划生成等多个核心问题,最终选择一个对应该 SQL 的最佳执行计划。 |

|

||||

| Code Generator(代码生成器) | 将执行计划转换为可执行的代码,但是不做任何优化选择。 |

|

||||

| Executor(执行器) | 启动 SQL 的执行过程。 * 对于本地执行计划,Executor 会简单的从执行计划的顶端的算子开始调用,根据算子自身的逻辑完成整个执行的过程,并返回执行结果。 * 对于远程或分布式计划,将执行树分成多个可以调度的子计划,并通过 RPC 将其发送给相关的节点去执行。 |

|

||||

|

||||

|

||||

@ -0,0 +1,190 @@

|

||||

SQL 执行计划简介

|

||||

===============================

|

||||

|

||||

执行计划(EXPLAIN)是对一条 SQL 查询语句在数据库中执行过程的描述。

|

||||

|

||||

用户可以通过 `EXPLAIN` 命令查看优化器针对给定 SQL 生成的逻辑执行计划。如果要分析某条 SQL 的性能问题,通常需要先查看 SQL 的执行计划,排查每一步 SQL 执行是否存在问题。所以读懂执行计划是 SQL 优化的先决条件,而了解执行计划的算子是理解 `EXPLAIN` 命令的关键。

|

||||

|

||||

EXPLAIN 命令格式

|

||||

---------------------------------

|

||||

|

||||

OceanBase 数据库的执行计划命令有三种模式:`EXPLAIN BASIC`、`EXPLAIN` 和 `EXPLAIN EXTENDED`。这三种模式对执行计划展现不同粒度的细节信息:

|

||||

|

||||

* `EXPLAIN BASIC` 命令用于最基本的计划展示。

|

||||

|

||||

|

||||

|

||||

* `EXPLAIN EXTENDED` 命令用于最详细的计划展示(通常在排查问题时使用这种展示模式)。

|

||||

|

||||

|

||||

|

||||

* `EXPLAIN` 命令所展示的信息可以帮助普通用户了解整个计划的执行方式。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

命令格式如下:

|

||||

|

||||

```sql

|

||||

EXPLAIN [BASIC | EXTENDED | PARTITIONS | FORMAT = format_name] explainable_stmt

|

||||

format_name: { TRADITIONAL | JSON }

|

||||

explainable_stmt: { SELECT statement

|

||||

| DELETE statement

|

||||

| INSERT statement

|

||||

| REPLACE statement

|

||||

| UPDATE statement }

|

||||

```

|

||||

|

||||

|

||||

|

||||

执行计划形状与算子信息

|

||||

--------------------------------

|

||||

|

||||

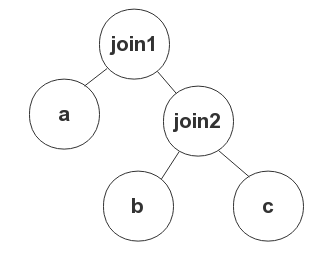

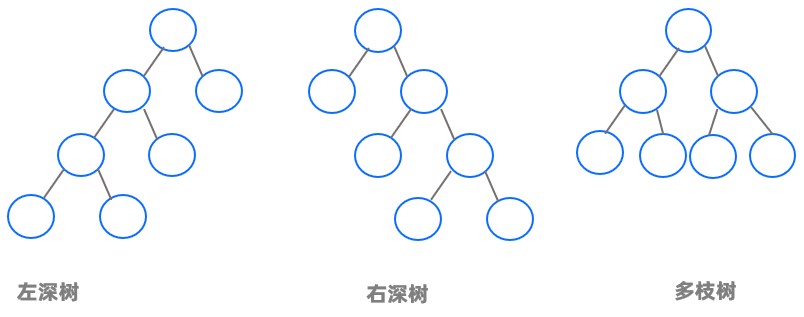

在数据库系统中,执行计划在内部通常是以树的形式来表示的,但是不同的数据库会选择不同的方式展示给用户。

|

||||

|

||||

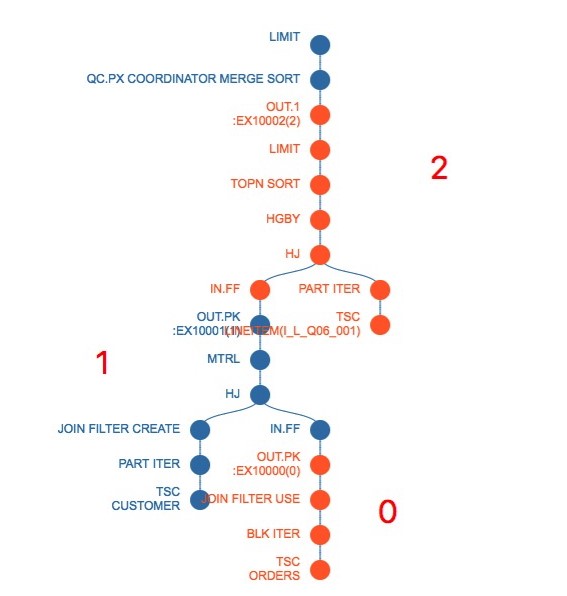

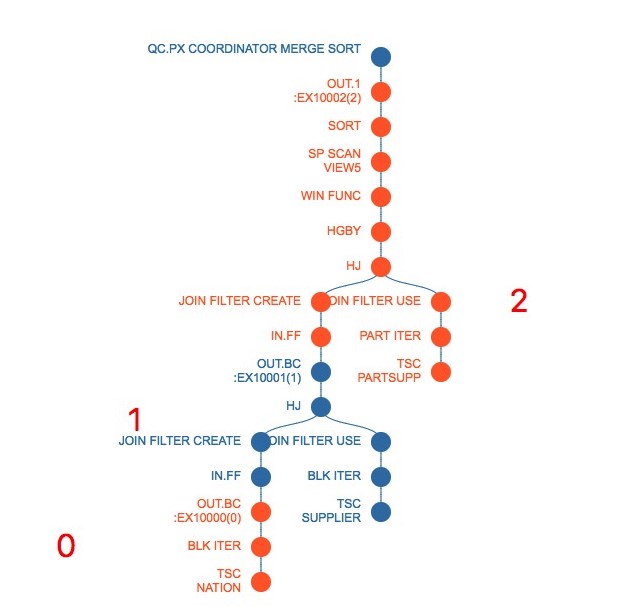

如下示例分别为 PostgreSQL 数据库、Oracle 数据库和 OceanBase 数据库对于 TPCDS Q3 的计划展示。

|

||||

|

||||

```sql

|

||||

obclient>SELECT /*TPC-DS Q3*/ *

|

||||

FROM (SELECT dt.d_year,

|

||||

item.i_brand_id brand_id,

|

||||

item.i_brand brand,

|

||||

Sum(ss_net_profit) sum_agg

|

||||

FROM date_dim dt,

|

||||

store_sales,

|

||||

item

|

||||

WHERE dt.d_date_sk = store_sales.ss_sold_date_sk

|

||||

AND store_sales.ss_item_sk = item.i_item_sk

|

||||

AND item.i_manufact_id = 914

|

||||

AND dt.d_moy = 11

|

||||

GROUP BY dt.d_year,

|

||||

item.i_brand,

|

||||

item.i_brand_id

|

||||

ORDER BY dt.d_year,

|

||||

sum_agg DESC,

|

||||

brand_id)

|

||||

WHERE rownum <= 100;

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

* PostgreSQL 数据库执行计划展示如下:

|

||||

|

||||

```sql

|

||||

Limit (cost=13986.86..13987.20 rows=27 width=91)

|

||||

-> Sort (cost=13986.86..13986.93 rows=27 width=65)

|

||||

Sort Key: dt.d_year, (sum(store_sales.ss_net_profit)), item.i_brand_id

|

||||

-> HashAggregate (cost=13985.95..13986.22 rows=27 width=65)

|

||||

-> Merge Join (cost=13884.21..13983.91 rows=204 width=65)

|

||||

Merge Cond: (dt.d_date_sk = store_sales.ss_sold_date_sk)

|

||||

-> Index Scan using date_dim_pkey on date_dim dt (cost=0.00..3494.62 rows=6080 width=8)

|

||||

Filter: (d_moy = 11)

|

||||

-> Sort (cost=12170.87..12177.27 rows=2560 width=65)

|

||||

Sort Key: store_sales.ss_sold_date_sk

|

||||

-> Nested Loop (cost=6.02..12025.94 rows=2560 width=65)

|

||||

-> Seq Scan on item (cost=0.00..1455.00 rows=16 width=59)

|

||||

Filter: (i_manufact_id = 914)

|

||||

-> Bitmap Heap Scan on store_sales (cost=6.02..658.94 rows=174 width=14)

|

||||

Recheck Cond: (ss_item_sk = item.i_item_sk)

|

||||

-> Bitmap Index Scan on store_sales_pkey (cost=0.00..5.97 rows=174 width=0)

|

||||

Index Cond: (ss_item_sk = item.i_item_sk)

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

* Oracle 数据库执行计划展示如下:

|

||||

|

||||

```sql

|

||||

Plan hash value: 2331821367

|

||||

--------------------------------------------------------------------------------------------------

|

||||

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

|

||||

--------------------------------------------------------------------------------------------------

|

||||

| 0 | SELECT STATEMENT | | 100 | 9100 | 3688 (1)| 00:00:01 |

|

||||

|* 1 | COUNT STOPKEY | | | | | |

|

||||

| 2 | VIEW | | 2736 | 243K| 3688 (1)| 00:00:01 |

|

||||

|* 3 | SORT ORDER BY STOPKEY | | 2736 | 256K| 3688 (1)| 00:00:01 |

|

||||

| 4 | HASH GROUP BY | | 2736 | 256K| 3688 (1)| 00:00:01 |

|

||||

|* 5 | HASH JOIN | | 2736 | 256K| 3686 (1)| 00:00:01 |

|

||||

|* 6 | TABLE ACCESS FULL | DATE_DIM | 6087 | 79131 | 376 (1)| 00:00:01 |

|

||||

| 7 | NESTED LOOPS | | 2865 | 232K| 3310 (1)| 00:00:01 |

|

||||

| 8 | NESTED LOOPS | | 2865 | 232K| 3310 (1)| 00:00:01 |

|

||||

|* 9 | TABLE ACCESS FULL | ITEM | 18 | 1188 | 375 (0)| 00:00:01 |

|

||||

|* 10 | INDEX RANGE SCAN | SYS_C0010069 | 159 | | 2 (0)| 00:00:01 |

|

||||

| 11 | TABLE ACCESS BY INDEX ROWID| STORE_SALES | 159 | 2703 | 163 (0)| 00:00:01 |

|

||||

--------------------------------------------------------------------------------------------------

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

<!-- -->

|

||||

|

||||

* OceanBase 数据库执行计划展示如下:

|

||||

|

||||

```sql

|

||||

|ID|OPERATOR |NAME |EST. ROWS|COST |

|

||||

-------------------------------------------------------

|

||||

|0 |LIMIT | |100 |81141|

|

||||

|1 | TOP-N SORT | |100 |81127|

|

||||

|2 | HASH GROUP BY | |2924 |68551|

|

||||

|3 | HASH JOIN | |2924 |65004|

|

||||

|4 | SUBPLAN SCAN |VIEW1 |2953 |19070|

|

||||

|5 | HASH GROUP BY | |2953 |18662|

|

||||

|6 | NESTED-LOOP JOIN| |2953 |15080|

|

||||

|7 | TABLE SCAN |ITEM |19 |11841|

|

||||

|8 | TABLE SCAN |STORE_SALES|161 |73 |

|

||||

|9 | TABLE SCAN |DT |6088 |29401|

|

||||

=======================================================

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

由示例可见,OceanBase 数据库的计划展示与 Oracle 数据库类似。OceanBase 数据库执行计划中的各列的含义如下:

|

||||

|

||||

|

||||

| 列名 | 含义 |

|

||||

|-----------|----------------------------|

|

||||

| ID | 执行树按照前序遍历的方式得到的编号(从 0 开始)。 |

|

||||

| OPERATOR | 操作算子的名称。 |

|

||||

| NAME | 对应表操作的表名(索引名)。 |

|

||||

| EST. ROWS | 估算该操作算子的输出行数。 |

|

||||

| COST | 该操作算子的执行代价(微秒)。 |

|

||||

|

||||

|

||||

**说明**

|

||||

|

||||

|

||||

|

||||

在表操作中,NAME 字段会显示该操作涉及的表的名称(别名),如果是使用索引访问,还会在名称后的括号中展示该索引的名称, 例如 t1(t1_c2) 表示使用了 t1_c2 这个索引。如果扫描的顺序是逆序,还会在后面使用 RESERVE 关键字标识,例如 `t1(t1_c2,RESERVE)`。

|

||||

|

||||

OceanBase 数据库 `EXPLAIN` 命令输出的第一部分是执行计划的树形结构展示。其中每一个操作在树中的层次通过其在 operator 中的缩进予以展示。树的层次关系用缩进来表示,层次最深的优先执行,层次相同的以特定算子的执行顺序为标准来执行。

|

||||

|

||||

上述 TPCDS Q3 示例的计划展示树如下:

|

||||

|

||||

OceanBase 数据库 `EXPLAIN` 命令输出的第二部分是各操作算子的详细信息,包括输出表达式、过滤条件、分区信息以及各算子的独有信息(包括排序键、连接键、下压条件等)。示例如下:

|

||||

|

||||

```unknow

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([t1.c1], [t1.c2], [t2.c1], [t2.c2]), filter(nil), sort_keys([t1.c1, ASC], [t1.c2, ASC]), prefix_pos(1)

|

||||

1 - output([t1.c1], [t1.c2], [t2.c1], [t2.c2]), filter(nil),

|

||||

equal_conds([t1.c1 = t2.c2]), other_conds(nil)

|

||||

2 - output([t2.c1], [t2.c2]), filter(nil), sort_keys([t2.c2, ASC])

|

||||

3 - output([t2.c2], [t2.c1]), filter(nil),

|

||||

access([t2.c2], [t2.c1]), partitions(p0)

|

||||

4 - output([t1.c1], [t1.c2]), filter(nil),

|

||||

access([t1.c1], [t1.c2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

@ -0,0 +1,69 @@

|

||||

TABLE SCAN

|

||||

===============================

|

||||

|

||||

TABLE SCAN 算子是存储层和 SQL 层的接口,用于展示优化器选择哪个索引来访问数据。

|

||||

|

||||

在 OceanBase 数据库中,对于普通索引,索引的回表逻辑是封装在 TABLE SCAN 算子中的;而对于全局索引,索引的回表逻辑由 TABLE LOOKUP 算子完成。

|

||||

|

||||

示例:含 TABLE SCAN 算子的执行计划

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT PRIMARY KEY, c2 INT, c3 INT, c4 INT,

|

||||

INDEX k1(c2,c3));

|

||||

Query OK, 0 rows affected (0.09 sec)

|

||||

|

||||

Q1:

|

||||

obclient>EXPLAIN EXTENDED SELECT * FROM t1 WHERE c1 = 1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

| ==================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

----------------------------------

|

||||

|0 |TABLE GET|t1 |1 |53 |

|

||||

==================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([t1.c1(0x7f22fbe69340)], [t1.c2(0x7f22fbe695c0)], [t1.c3(0x7f22fbe69840)], [t1.c4(0x7f22fbe69ac0)]), filter(nil),

|

||||

access([t1.c1(0x7f22fbe69340)], [t1.c2(0x7f22fbe695c0)], [t1.c3(0x7f22fbe69840)], [t1.c4(0x7f22fbe69ac0)]), partitions(p0),

|

||||

is_index_back=false,

|

||||

range_key([t1.c1(0x7f22fbe69340)]), range[1 ; 1],

|

||||

range_cond([t1.c1(0x7f22fbe69340) = 1(0x7f22fbe68cf0)])

|

||||

|

||||

Q2:

|

||||

obclient>EXPLAIN EXTENDED SELECT * FROM t1 WHERE c2 < 1 AND c3 < 1 AND

|

||||

c4 < 1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

| ======================================

|

||||

|ID|OPERATOR |NAME |EST. ROWS|COST |

|

||||

--------------------------------------

|

||||

|0 |TABLE SCAN|t1(k1)|100 |12422|

|

||||

======================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([t1.c1(0x7f22fbd1e220)], [t1.c2(0x7f227decec40)], [t1.c3(0x7f227decf9b0)], [t1.c4(0x7f22fbd1dfa0)]), filter([t1.c3(0x7f227decf9b0) < 1(0x7f227decf360)], [t1.c4(0x7f22fbd1dfa0) < 1(0x7f22fbd1d950)]),

|

||||

access([t1.c2(0x7f227decec40)], [t1.c3(0x7f227decf9b0)], [t1.c4(0x7f22fbd1dfa0)], [t1.c1(0x7f22fbd1e220)]), partitions(p0),

|

||||

is_index_back=true, filter_before_indexback[true,false],

|

||||

range_key([t1.c2(0x7f227decec40)], [t1.c3(0x7f227decf9b0)], [t1.c1(0x7f22fbd1e220)]),

|

||||

range(NULL,MAX,MAX ; 1,MIN,MIN),

|

||||

range_cond([t1.c2(0x7f227decec40) < 1(0x7f227dece5f0)])

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,执行计划展示中的 outputs \& filters 详细展示了 TABLE SCAN 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|---------------------------------------------|-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

|

||||

| operator | TABLE SCAN 算子的 operator 有两种形式:TABLE SCAN 和 TABLE GET。 * TABLE SCAN:属于范围扫描,会返回 0 行或者多行数据。 * TABLE GET:直接用主键定位,返回 0 行或者 1 行数据。 |

|

||||

| name | 选择用哪个索引来访问数据。选择的索引的名字会跟在表名后面,如果没有索引的名字,则说明执行的是主表扫描。 这里需要注意,在 OceanBase 数据库中,主表和索引的组织结构是一样的,主表本身也是一个索引。 |

|

||||

| output | 该算子的输出列。 |

|

||||

| filter | 该算子的过滤谓词。 由于示例中 TABLE SCAN 算子没有设置 filter,所以为 nil。 |

|

||||

| partitions | 查询需要扫描的分区。 |

|

||||

| is_index_back | 该算子是否需要回表。 例如,在 Q1 查询中,因为选择了主表,所以不需要回表。在 Q2 查询中,索引列是 `(c2,c3,c1)`, 由于查询需要返回 c4 列,所以需要回表。 |

|

||||

| filter_before_indexback | 与每个 filter 对应,表明该 filter 是可以直接在索引上进行计算,还是需要索引回表之后才能计算。 例如,在 Q2 查询中,filter `c3 < 1` 可以直接在索引上计算,能减少回表数量;filter `c4 < 1` 需要回表取出 c4 列之后才能计算。 |

|

||||

| range_key/range/range_cond | * range_key:索引的 rowkey 列。 <!-- --> * range:索引开始扫描和结束扫描的位置。判断是否是全表扫描需要关注 range 的范围。例如,对于一个 rowkey 有三列的场景,`range(MIN,MIN, MIN ; MAX, MAX, MAX)`代表的就是真正意义上的全表扫描。 * range_cond:决定索引开始扫描和结束扫描位置的相关谓词。 |

|

||||

|

||||

|

||||

@ -0,0 +1,50 @@

|

||||

MATERIAL

|

||||

=============================

|

||||

|

||||

MATERIAL 算子用于物化下层算子输出的数据。

|

||||

|

||||

OceanBase 数据库以流式数据执行计划,但有时算子需要等待下层算子输出所有数据后才能够开始执行,所以需要在下方添加一个 MATERIAL 算子物化所有的数据。或者在子计划需要重复执行的时候,使用 MATERIAL 算子可以避免重复执行。

|

||||

|

||||

如下示例中,t1 表与 t2 表执行 NESTED LOOP JOIN 运算时,右表需要重复扫描,可以在右表有一个 MATERIAL 算子,保存 t2 表的所有数据。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT, c2 INT, c3 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE TABLE t2(c1 INT ,c2 INT ,c3 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>EXPLAIN SELECT /*+ORDERED USE_NL(T2)*/* FROM t1,t2

|

||||

WHERE t1.c1=t2.c1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

===========================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST |

|

||||

-------------------------------------------

|

||||

|0 |NESTED-LOOP JOIN| |2970 |277377|

|

||||

|1 | TABLE SCAN |t1 |3 |37 |

|

||||

|2 | MATERIAL | |100000 |176342|

|

||||

|3 | TABLE SCAN |t2 |100000 |70683 |

|

||||

===========================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([t1.c1], [t1.c2], [t1.c3], [t2.c1], [t2.c2], [t2.c3]), filter(nil),

|

||||

conds([t1.c1 = t2.c1]), nl_params_(nil)

|

||||

1 - output([t1.c1], [t1.c2], [t1.c3]), filter(nil),

|

||||

access([t1.c1], [t1.c2], [t1.c3]), partitions(p0)

|

||||

2 - output([t2.c1], [t2.c2], [t2.c3]), filter(nil)

|

||||

3 - output([t2.c1], [t2.c2], [t2.c3]), filter(nil),

|

||||

access([t2.c1], [t2.c2], [t2.c3]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,执行计划展示中 2 号算子 MATERIAL 的功能是保存 t2 表的数据,以避免每次联接都从磁盘扫描 t2 表的数据。执行计划展示中的 outputs \& filters 详细展示了 MATERIAL 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|----------|------------------------------------------------------------------|

|

||||

| output | 该算子输出的表达式。 其中 rownum() 表示 ROWNUM 对应的表达式。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 MATERIAL 算子没有设置 filter,所以为 nil。 |

|

||||

|

||||

|

||||

@ -0,0 +1,42 @@

|

||||

SORT

|

||||

=========================

|

||||

|

||||

SORT 算子用于对输入的数据进行排序。

|

||||

|

||||

示例:对 t1 表的数据排序,并按照 c1 列降序排列和 c2 列升序排列

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE TABLE t2(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>EXPLAIN SELECT c1 FROM t1 ORDER BY c1 DESC, c2 ASC\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

====================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

------------------------------------

|

||||

|0 |SORT | |3 |40 |

|

||||

|1 | TABLE SCAN|t1 |3 |37 |

|

||||

====================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([t1.c1]), filter(nil), sort_keys([t1.c1, DESC], [t1.c2, ASC])

|

||||

1 - output([t1.c1], [t1.c2]), filter(nil),

|

||||

access([t1.c1], [t1.c2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,执行计划展示中 0 号算子 SORT 对 t1 表的数据进行排序,执行计划展示中的 outputs \& filters 详细展示了 SORT 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|-------------------------------------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

|

||||

| output | 该算子的输出列。 |

|

||||

| filter | 该算子的过滤谓词。 由于示例中 SORT 算子没有设置 filter,所以为 nil。 |

|

||||

| sort_keys(\[column, DESC\],\[column, ASC\] ...) | 按 column 列排序。 * DESC:降序。 * ASC:升序。 例如,`sort_keys([t1.c1, DESC],[t1.c2, ASC])`中指定排序键分别为 c1 和 c2,并且以 c1 列降序, c2 列升序排列。 |

|

||||

|

||||

|

||||

@ -0,0 +1,226 @@

|

||||

LIMIT

|

||||

==========================

|

||||

|

||||

LIMIT 算子用于限制数据输出的行数,与 MySQL 的 LIMIT 算子功能相同。

|

||||

|

||||

在 OceanBase 数据库的 MySQL 模式中处理含有 LIMIT 的 SQL 时,SQL 优化器都会为其生成一个 LIMIT 算子,但在一些特殊场景不会给与分配,例如 LIMIT 可以下压到基表的场景,就没有分配的必要性。

|

||||

|

||||

而对于 OceanBase 数据库的 Oracle 模式,以下两种场景会为其分配 LIMIT 算子:

|

||||

|

||||

* ROWNUM 经过 SQL 优化器改写生成

|

||||

|

||||

|

||||

|

||||

* 为了兼容 Oracle12c 的 FETCH 功能

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

MySQL 模式含有 LIMIT 的 SQL 场景

|

||||

----------------------------------------------

|

||||

|

||||

示例 1:OceanBase 数据库的 MySQL 模式含有 LIMIT 的 SQL 场景

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE TABLE t2(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(1, 1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(2, 2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(3, 3);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(1, 1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(2, 2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(3, 3);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

Q1:

|

||||

obclient>EXPLAIN SELECT t1.c1 FROM t1,t2 LIMIT 1 OFFSET 1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

| =====================================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST |

|

||||

-----------------------------------------------------

|

||||

|0 |LIMIT | |1 |39 |

|

||||

|1 | NESTED-LOOP JOIN CARTESIAN| |2 |39 |

|

||||

|2 | TABLE SCAN |t1 |1 |36 |

|

||||

|3 | TABLE SCAN |t2 |100000 |59654|

|

||||

=====================================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([t1.c1]), filter(nil), limit(1), offset(1)

|

||||

1 - output([t1.c1]), filter(nil),

|

||||

conds(nil), nl_params_(nil)

|

||||

2 - output([t1.c1]), filter(nil),

|

||||

access([t1.c1]), partitions(p0)

|

||||

3 - output([t2.__pk_increment]), filter(nil),

|

||||

access([t2.__pk_increment]), partitions(p0)

|

||||

|

||||

Q2:

|

||||

obclient>EXPLAIN SELECT * FROM t1 LIMIT 2\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

| ===================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-----------------------------------

|

||||

|0 |TABLE SCAN|t1 |2 |37 |

|

||||

===================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([t1.c1], [t1.c2]), filter(nil),

|

||||

access([t1.c1], [t1.c2]), partitions(p0),

|

||||

limit(2), offset(nil)

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,Q1 查询的执行计划展示中的 outputs \& filters 详细列出了 LIMIT 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|----------|--------------------------------------------------------------------------|

|

||||

| output | 该算子输出的表达式。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 LIMIT 算子没有设置 filter,所以为 nil。 |

|

||||

| limit | 限制输出的行数,是一个常量。 |

|

||||

| offset | 距离当前位置的偏移行数,是一个常量。 由于示例中的 SQL 中不含有 offset,因此生成的计划中为 nil。 |

|

||||

|

||||

|

||||

|

||||

Q2 查询的执行计划展示中,虽然 SQL 中含有 LIMIT,但是并未分配 LIMIT 算子,而是将相关表达式下压到了 TABLE SCAN 算子上,这种下压 LIMIT 行为是 SQL 优化器的一种优化方式,详细信息请参见 [TABLE SCAN](../../../12.sql-optimization-guide-1/2.sql-execution-plan-3/2.execution-plan-operator-2/1.table-scan-2.md)。

|

||||

|

||||

Oracle 模式含有 COUNT 的 SQL 改写为 LIMIT 场景

|

||||

---------------------------------------------------------

|

||||

|

||||

由于 Oracle 模式含有 COUNT 的 SQL 改写为 LIMIT 场景在 COUNT 算子章节已经有过相关介绍,详细信息请参见 [COUNT](../../../12.sql-optimization-guide-1/2.sql-execution-plan-3/2.execution-plan-operator-2/4.COUNT-1-2-3-4.md)。

|

||||

|

||||

Oracle 模式含有 FETCH 的 SQL 场景

|

||||

-----------------------------------------------

|

||||

|

||||

示例 2:OceanBase 数据库的 Oracle 模式含有 FETCH 的 SQL 场景

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE T1(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE TABLE T1(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(1, 1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(2, 2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(3, 3);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(1, 1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(2, 2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(3, 3);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

Q3:

|

||||

obclient>EXPLAIN SELECT * FROM t1,t2 OFFSET 1 ROWS

|

||||

FETCH NEXT 1 ROWS ONLY\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

| =====================================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST |

|

||||

-----------------------------------------------------

|

||||

|0 |LIMIT | |1 |238670 |

|

||||

|1 | NESTED-LOOP JOIN CARTESIAN| |2 |238669 |

|

||||

|2 | TABLE SCAN |T1 |1 |36 |

|

||||

|3 | MATERIAL | |100000 |238632 |

|

||||

|4 | TABLE SCAN |T2 |100000 |64066|

|

||||

=====================================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([T1.C1], [T1.C2], [T2.C1], [T2.C2]), filter(nil), limit(?), offset(?)

|

||||

1 - output([T1.C1], [T1.C2], [T2.C1], [T2.C2]), filter(nil),

|

||||

conds(nil), nl_params_(nil)

|

||||

2 - output([T1.C1], [T1.C2]), filter(nil),

|

||||

access([T1.C1], [T1.C2]), partitions(p0)

|

||||

3 - output([T2.C1], [T2.C2]), filter(nil)

|

||||

4 - output([T2.C1], [T2.C2]), filter(nil),

|

||||

access([T2.C1], [T2.C2]), partitions(p0)

|

||||

|

||||

|

||||

Q4:

|

||||

obclient>EXPLAIN SELECT * FROM t1 FETCH NEXT 1 ROWS ONLY\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

| ===================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-----------------------------------

|

||||

|0 |TABLE SCAN|T1 |1 |37 |

|

||||

===================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([T1.C1], [T1.C2]), filter(nil),

|

||||

access([T1.C1], [T1.C2]), partitions(p0),

|

||||

limit(?), offset(nil)

|

||||

|

||||

|

||||

Q5:

|

||||

obclient>EXPLAIN SELECT * FROM t2 ORDER BY c1 FETCH NEXT 10

|

||||

PERCENT ROW WITH TIES\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

| =======================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST |

|

||||

---------------------------------------

|

||||

|0 |LIMIT | |10000 |573070|

|

||||

|1 | SORT | |100000 |559268|

|

||||

|2 | TABLE SCAN|T2 |100000 |64066 |

|

||||

=======================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([T2.C1], [T2.C2]), filter(nil), limit(nil), offset(nil), percent(?), with_ties(true)

|

||||

1 - output([T2.C1], [T2.C2]), filter(nil), sort_keys([T2.C1, ASC])

|

||||

2 - output([T2.C1], [T2.C2]), filter(nil),

|

||||

access([T2.C1], [T2.C2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,Q3 和 Q4 的查询的执行计划展示中,与之前 MySQL 模式的 Q1 和 Q2 查询基本相同,这是因为 Oracle 12c 的 FETCH 功能和 MySQL 的 LIMIT 功能类似,两者的区别如 Q5 执行计划展示中所示。

|

||||

|

||||

执行计划展示中的 outputs \& filters 详细列出了 LIMIT 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|-----------|-------------------------------------------------------------------------------------------------|

|

||||

| output | 该算子输出的表达式。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 LIMIT 算子没有设置 filter,所以为 nil。 |

|

||||

| limit | 限制输出的行数,是一个常量。 |

|

||||

| offset | 距离当前位置的偏移行数,是一个常量。 |

|

||||

| percent | 按照数据总行数的百分比输出,是一个常量。 |

|

||||

| with_ties | 是否在排序后的将最后一行按照等值一起输出。 例如,要求输出最后一行,但是排序之后有两行的值都为 1,如果设置了最后一行按照等值一起输出,那么这两行都会被输出。 |

|

||||

|

||||

|

||||

|

||||

以上 LIMIT 算子的新增的计划展示属性,都是在 Oracle 模式下的 FETCH 功能特有的,不影响 MySQL 模式计划。关于 Oracle12c 的 FETCH 语法的详细信息,请参见 [Oracle 12c Fetch Rows](https://renenyffenegger.ch/notes/development/databases/Oracle/SQL/select/first-n-rows/index#ora-sql-row-limiting-clause)。

|

||||

@ -0,0 +1,135 @@

|

||||

FOR UPDATE

|

||||

===============================

|

||||

|

||||

FOR UPDATE 算子用于对表中的数据进行加锁操作。

|

||||

|

||||

OceanBase 数据库支持的 FOR UPDATE 算子包括 FOR UPDATE 和 MULTI FOR UPDATE。

|

||||

|

||||

FOR UPDATE 算子执行查询的一般流程如下:

|

||||

|

||||

1. 首先执行 `SELECT` 语句部分,获得查询结果集。

|

||||

|

||||

|

||||

|

||||

2. 对查询结果集相关的记录进行加锁操作。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

FOR UPDATE

|

||||

-------------------------------

|

||||

|

||||

FOR UPDATE 用于对单表(或者单个分区)进行加锁。

|

||||

|

||||

如下示例中,Q1 查询是对 t1 表中满足 `c1 = 1` 的行进行加锁。这里 t1 表是一张单分区的表,所以 1 号算子生成了一个 FOR UPDATE 算子。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE TABLE t2(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(1, 1);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(2, 2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(3, 3);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(1, 1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(2, 2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t2 VALUES(3, 3);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

Q1:

|

||||

obclient> EXPLAIN SELECT * FROM t1 WHERE c1 = 1 FOR UPDATE\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

=====================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-------------------------------------

|

||||

|0 |MATERIAL | |10 |856 |

|

||||

|1 | FOR UPDATE | |10 |836 |

|

||||

|2 | TABLE SCAN|T1 |10 |836 |

|

||||

=====================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([T1.C1], [T1.C2]), filter(nil)

|

||||

1 - output([T1.C1], [T1.C2]), filter(nil), lock tables(T1)

|

||||

2 - output([T1.C1], [T1.C2], [T1.__pk_increment]), filter([T1.C1 = 1]),

|

||||

access([T1.C1], [T1.C2], [T1.__pk_increment]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,Q1 查询的执行计划展示中的 outputs \& filters 详细列出了 FOR UPDATE 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|-------------|--------------------------------------------------------------------|

|

||||

| output | 该算子输出的表达式。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 FOR UPDATE 算子没有设置 filter,所以为 nil。 |

|

||||

| lock tables | 需要加锁的表。 |

|

||||

|

||||

|

||||

|

||||

MULTI FOR UPDATE

|

||||

-------------------------------------

|

||||

|

||||

MULTI FOR UPDATE 用于对多表(或者多个分区)进行加锁操作。

|

||||

|

||||

如下示例中,Q2 查询是对 t1 和 t2 两张表的数据进行加锁,加锁对象是满足 `c1 = 1 AND c1 = d1` 的行。由于需要对多个表的行进行加锁,因此 1 号算子是 MULTI FOR UPDATE。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1 (c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE TABLE t2 (d1 INT, d2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>EXPLAIN SELECT * FROM t1, t2 WHERE c1 = 1 AND c1 = d1

|

||||

FOR UPDATE\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

=====================================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-----------------------------------------------------

|

||||

|0 |MATERIAL | |10 |931 |

|

||||

|1 | MULTI FOR UPDATE | |10 |895 |

|

||||

|2 | NESTED-LOOP JOIN CARTESIAN| |10 |895 |

|

||||

|3 | TABLE GET |T2 |1 |52 |

|

||||

|4 | TABLE SCAN |T1 |10 |836 |

|

||||

=====================================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([T1.C1], [T1.C2], [T2.D1], [T2.D2]), filter(nil)

|

||||

1 - output([T1.C1], [T1.C2], [T2.D1], [T2.D2]), filter(nil), lock tables(T1, T2)

|

||||

2 - output([T1.C1], [T1.C2], [T2.D1], [T2.D2], [T1.__pk_increment]), filter(nil),

|

||||

conds(nil), nl_params_(nil)

|

||||

3 - output([T2.D1], [T2.D2]), filter(nil),

|

||||

access([T2.D1], [T2.D2]), partitions(p0)

|

||||

4 - output([T1.C1], [T1.C2], [T1.__pk_increment]), filter([T1.C1 = 1]),

|

||||

access([T1.C1], [T1.C2], [T1.__pk_increment]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,Q2 查询的执行计划展示中的 outputs \& filters 详细列出了 MULTI FOR UPDATE 算子的信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|-------------|--------------------------------------------------------------------------|

|

||||

| output | 该算子输出的列。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 MULTI FOR UPDATE 算子没有设置 filter,所以为 nil。 |

|

||||

| lock tables | 需要加锁的表。 |

|

||||

|

||||

|

||||

@ -0,0 +1,47 @@

|

||||

SELECT INTO

|

||||

================================

|

||||

|

||||

SELECT INTO 算子用于将查询结果赋值给变量列表,查询仅返回一行数据。

|

||||

|

||||

如下示例查询中, `SELECT` 输出列为 `COUNT(*)` 和 `MAX(c1)`,其查询结果分别赋值给变量 @a 和 @b。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(1,1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(2,2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>EXPLAIN SELECT COUNT(*), MAX(c1) INTO @a, @b FROM t1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

=========================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-----------------------------------------

|

||||

|0 |SELECT INTO | |0 |37 |

|

||||

|1 | SCALAR GROUP BY| |1 |37 |

|

||||

|2 | TABLE SCAN |t1 |2 |37 |

|

||||

=========================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([T_FUN_COUNT(*)], [T_FUN_MAX(t1.c1)]), filter(nil)

|

||||

1 - output([T_FUN_COUNT(*)], [T_FUN_MAX(t1.c1)]), filter(nil),

|

||||

group(nil), agg_func([T_FUN_COUNT(*)], [T_FUN_MAX(t1.c1)])

|

||||

2 - output([t1.c1]), filter(nil),

|

||||

access([t1.c1]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,执行计划展示中的 outputs \& filters 详细列出了 SELECT INTO 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|----------|---------------------------------------------------------------------|

|

||||

| output | 该算子赋值给变量列表的表达式。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 SELECT INTO 算子没有设置 filter,所以为 nil。 |

|

||||

|

||||

|

||||

@ -0,0 +1,79 @@

|

||||

SUBPLAN SCAN

|

||||

=================================

|

||||

|

||||

SUBPLAN SCAN 算子用于展示优化器从哪个视图访问数据。

|

||||

|

||||

当查询的 FROM TABLE 为视图时,执行计划中会分配 SUBPLAN SCAN 算子。SUBPLAN SCAN 算子类似于 TABLE SCAN 算子,但它不从基表读取数据,而是读取孩子节点的输出数据。

|

||||

|

||||

如下示例中,Q1 查询中 1 号算子为视图中查询生成,0 号算子 SUBPLAN SCAN 读取 1 号算子并输出。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(1,1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(2,2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE VIEW v AS SELECT * FROM t1 LIMIT 5;

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

Q1:

|

||||

obclient>EXPLAIN SELECT * FROM V WHERE c1 > 0\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

=====================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-------------------------------------

|

||||

|0 |SUBPLAN SCAN|v |1 |37 |

|

||||

|1 | TABLE SCAN |t1 |2 |37 |

|

||||

=====================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([v.c1], [v.c2]), filter([v.c1 > 0]),

|

||||

access([v.c1], [v.c2])

|

||||

1 - output([t1.c1], [t1.c2]), filter(nil),

|

||||

access([t1.c1], [t1.c2]), partitions(p0),

|

||||

limit(5), offset(nil)

|

||||

```

|

||||

|

||||

|

||||

**说明**

|

||||

|

||||

|

||||

|

||||

目前 LIMIT 算子只支持 MySQL 模式的 SQL 场景。详细信息请参考 [LIMIT](../../../12.sql-optimization-guide-1/2.sql-execution-plan-3/2.execution-plan-operator-2/12.LIMIT-1-2.md)。

|

||||

|

||||

上述示例中,Q1 查询的执行计划展示中的 outputs \& filters 详细列出了 SUBPLAN SCAN 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|----------|-------------------------------------------------------------------|

|

||||

| output | 该算子输出的表达式。 |

|

||||

| filter | 该算子上的过滤条件。 例如 `filter([v.c1 > 0])` 中的 `v.c1 > 0`。 |

|

||||

| access | 该算子从子节点读取的需要使用的列名。 |

|

||||

|

||||

|

||||

|

||||

当 `FROM TABLE` 为视图并且查询满足一定条件时能够对查询进行视图合并改写,此时执行计划中并不会出现 SUBPLAN SCAN。如下例所示,Q2 查询相比 Q1 查询减少了过滤条件,不再需要分配 SUBPLAN SCAN 算子。

|

||||

|

||||

```javascript

|

||||

Q2:

|

||||

obclient>EXPLAIN SELECT * FROM v\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

===================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-----------------------------------

|

||||

|0 |TABLE SCAN|t1 |2 |37 |

|

||||

===================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([t1.c1], [t1.c2]), filter(nil),

|

||||

access([t1.c1], [t1.c2]), partitions(p0),

|

||||

limit(5), offset(nil)

|

||||

```

|

||||

|

||||

|

||||

@ -0,0 +1,121 @@

|

||||

UNION

|

||||

==========================

|

||||

|

||||

UNION 算子用于将两个查询的结果集进行并集运算。

|

||||

|

||||

OceanBase 数据库支持的 UNION 算子包括 UNION ALL、 HASH UNION DISTINCT 和 MERGE UNION DISTINCT。

|

||||

|

||||

UNION ALL

|

||||

------------------------------

|

||||

|

||||

UNION ALL 用于直接对两个查询结果集进行合并输出。

|

||||

|

||||

如下示例中,Q1 对两个查询使用 UNION ALL 进行联接,使用 UNION ALL 算子进行并集运算。算子执行时依次输出左右子节点所有输出结果。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT PRIMARY KEY, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(1,1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(2,2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

Q1:

|

||||

obclient>EXPLAIN SELECT c1 FROM t1 UNION ALL SELECT c2 FROM t1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

====================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

------------------------------------

|

||||

|0 |UNION ALL | |4 |74 |

|

||||

|1 | TABLE SCAN|T1 |2 |37 |

|

||||

|2 | TABLE SCAN|T1 |2 |37 |

|

||||

====================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([UNION(T1.C1, T1.C2)]), filter(nil)

|

||||

1 - output([T1.C1]), filter(nil),

|

||||

access([T1.C1]), partitions(p0)

|

||||

2 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,执行计划展示中的 outputs \& filters 详细列出了 UNION ALL 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|----------|-------------------------------------------------------------------|

|

||||

| output | 该算子的输出表达式。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 UNION ALL 算子没有设置 filter,所以为 nil。 |

|

||||

|

||||

|

||||

|

||||

MERGE UNION DISTINCT

|

||||

-----------------------------------------

|

||||

|

||||

MERGE UNION DISTINCT 用于对结果集进行并集、去重后进行输出。

|

||||

|

||||

如下示例中,Q2 对两个查询使用 UNION DISTINCT 进行联接, c1 有可用排序,0 号算子生成 MERGE UNION DISTINCT 进行取并集、去重。由于 c2 无可用排序,所以在 3 号算子上分配了 SORT 算子进行排序。算子执行时从左右子节点读取有序输入,进行合并得到有序输出并去重。

|

||||

|

||||

```javascript

|

||||

Q2:

|

||||

obclient>EXPLAIN SELECT c1 FROM t1 UNION SELECT c2 FROM t1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

=============================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

---------------------------------------------

|

||||

|0 |MERGE UNION DISTINCT| |4 |77 |

|

||||

|1 | TABLE SCAN |T1 |2 |37 |

|

||||

|2 | SORT | |2 |39 |

|

||||

|3 | TABLE SCAN |T1 |2 |37 |

|

||||

=============================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([UNION(T1.C1, T1.C2)]), filter(nil)

|

||||

1 - output([T1.C1]), filter(nil),

|

||||

access([T1.C1]), partitions(p0)

|

||||

2 - output([T1.C2]), filter(nil), sort_keys([T1.C2, ASC])

|

||||

3 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例的执行计划展示中的 outputs \& filters 详细列出了 MERGE UNION DISTINCT 算子的输出信息,字段的含义与 UNION ALL 算子相同。

|

||||

|

||||

HASH UNION DISTINCT

|

||||

----------------------------------------

|

||||

|

||||

HASH UNION DISTINCT 用于对结果集进行并集、去重后进行输出。

|

||||

|

||||

如下示例中,Q3 对两个查询使用 UNION DISTINCT 进行联接,无可利用排序,0 号算子使用 HASH UNION DISTINCT 进行并集、去重。算子执行时读取左右子节点输出,建立哈希表进行去重,最终输出去重后结果。

|

||||

|

||||

```javascript

|

||||

Q3:

|

||||

obclient>EXPLAIN SELECT c2 FROM t1 UNION SELECT c2 FROM t1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

============================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

--------------------------------------------

|

||||

|0 |HASH UNION DISTINCT| |4 |77 |

|

||||

|1 | TABLE SCAN |T1 |2 |37 |

|

||||

|2 | TABLE SCAN |T1 |2 |37 |

|

||||

============================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([UNION(T1.C2, T1.C2)]), filter(nil)

|

||||

1 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

2 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例的执行计划展示中的 outputs \& filters 详细列出了 HASH UNION DISTINCT 算子的输出信息,字段的含义与 UNION ALL 算子相同。

|

||||

@ -0,0 +1,86 @@

|

||||

INTERSECT

|

||||

==============================

|

||||

|

||||

INTERSECT 算子用于对左右孩子算子输出进行交集运算,并进行去重。

|

||||

|

||||

OceanBase 数据库支持的 INTERSECT 算子包括 MERGE INTERSECT DISTINCT 和 HASH INTERSECT DISTINCT。

|

||||

|

||||

MERGE INTERSECT DISTINCT

|

||||

---------------------------------------------

|

||||

|

||||

如下示例中,Q1 对两个查询使用 INTERSECT 联接,c1 有可用排序,0 号算子生成了 MERGE INTERSECT DISTINCT 进行求取交集、去重。由于 c2 无可用排序,所以在 3 号算子上分配了 SORT 算子进行排序。算子执行时从左右子节点读取有序输入,利用有序输入进行 MERGE,实现去重并得到交集结果。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT PRIMARY KEY, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(1,1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(2,2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

Q1:

|

||||

obclient>EXPLAIN SELECT c1 FROM t1 INTERSECT SELECT c2 FROM t1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

=================================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-------------------------------------------------

|

||||

|0 |MERGE INTERSECT DISTINCT| |2 |77 |

|

||||

|1 | TABLE SCAN |T1 |2 |37 |

|

||||

|2 | SORT | |2 |39 |

|

||||

|3 | TABLE SCAN |T1 |2 |37 |

|

||||

=================================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([INTERSECT(T1.C1, T1.C2)]), filter(nil)

|

||||

1 - output([T1.C1]), filter(nil),

|

||||

access([T1.C1]), partitions(p0)

|

||||

2 - output([T1.C2]), filter(nil), sort_keys([T1.C2, ASC])

|

||||

3 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,执行计划展示中的 outputs \& filters 详细列出了所有 INTERSECT 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|----------|------------------------------------------------------------------------------------------|

|

||||

| output | 该算子的输出表达式。 使用 INTERSECT 联接的两个子算子对应输出,即表示交集运算输出结果中的一列,括号内部为左右子节点对应此列的输出列。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 INTERSECT 算子没有设置 filter,所以为 nil。 |

|

||||

|

||||

|

||||

|

||||

HASH INTERSECT DISTINCT

|

||||

--------------------------------------------

|

||||

|

||||

如下例所示,Q2 对两个查询使用 INTERSECT 进行联接,不可利用排序,0 号算子使用 HASH INTERSECT DISTINCT 进行求取交集、去重。算子执行时先读取一侧子节点输出建立哈希表并去重,再读取另一侧子节点利用哈希表求取交集并去重。

|

||||

|

||||

```javascript

|

||||

Q2:

|

||||

obclient>EXPLAIN SELECT c2 FROM t1 INTERSECT SELECT c2 FROM t1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

================================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

------------------------------------------------

|

||||

|0 |HASH INTERSECT DISTINCT| |2 |77 |

|

||||

|1 | TABLE SCAN |T1 |2 |37 |

|

||||

|2 | TABLE SCAN |T1 |2 |37 |

|

||||

================================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([INTERSECT(T1.C2, T1.C2)]), filter(nil)

|

||||

1 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

2 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例的执行计划展示中的 outputs \& filters 详细列出了 HASH INTERSECT DISTINCT 算子的输出信息,字段的含义与 MERGE INTERSECT DISTINCT 算子相同。

|

||||

@ -0,0 +1,87 @@

|

||||

EXCEPT/MINUS

|

||||

=================================

|

||||

|

||||

EXCEPT 算子用于对左右孩子算子输出集合进行差集运算,并进行去重。

|

||||

|

||||

Oracle 模式下一般使用 MINUS 进行差集运算,MySQL 模式下一般使用 EXCEPT 进行差集运算。OceanBase 数据库的 MySQL 模式不区分 EXCEPT 和 MINUS,两者均可作为差集运算关键字使用。

|

||||

|

||||

OceanBase 数据库支持的 EXCEPT 算子包括 MERGE EXCEPT DISTINCT 和 HASH EXCEPT DISTINCT。

|

||||

|

||||

MERGE EXCEPT DISTINCT

|

||||

------------------------------------------

|

||||

|

||||

如下示例中,Q1 对两个查询使用 MINUS 进行联接, c1 有可用排序,0 号算子生成了 MERGE EXCEPT DISTINCT 进行求取差集、去重,由于 c2 无可用排序,所以在 3 号算子上分配了 SORT 算子进行排序。算子执行时从左右孩子节点读取有序输入,利用有序输入进行 MERGE, 实现去重并得到差集结果。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT PRIMARY KEY, c2 INT);

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(1,1);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

obclient>INSERT INTO t1 VALUES(2,2);

|

||||

Query OK, 1 rows affected (0.12 sec)

|

||||

|

||||

Q1:

|

||||

obclient>EXPLAIN SELECT c1 FROM t1 MINUS SELECT c2 FROM t1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

==============================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

----------------------------------------------

|

||||

|0 |MERGE EXCEPT DISTINCT| |2 |77 |

|

||||

|1 | TABLE SCAN |T1 |2 |37 |

|

||||

|2 | SORT | |2 |39 |

|

||||

|3 | TABLE SCAN |T1 |2 |37 |

|

||||

==============================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([MINUS(T1.C1, T1.C2)]), filter(nil)

|

||||

1 - output([T1.C1]), filter(nil),

|

||||

access([T1.C1]), partitions(p0)

|

||||

2 - output([T1.C2]), filter(nil), sort_keys([T1.C2, ASC])

|

||||

3 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,执行计划展示中的 outputs \& filters 详细列出了 EXCEPT 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|----------|----------------------------------------------------------------------------------------------------------------------------------|

|

||||

| output | 该算子的输出表达式。 使用 EXCEPT/MINUS 联接的两孩子算子对应输出(Oracle 模式使用 MINUS,MySQL 模式使用 EXCEPT),表示差集运算输出结果中的一列,括号内部为左右孩子节点对应此列的输出列。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 EXCEPT 算子没有设置 filter,所以为 nil。 |

|

||||

|

||||

|

||||

|

||||

HASH EXCEPT DISTINCT

|

||||

-----------------------------------------

|

||||

|

||||

如下示例中,Q2 对两个查询使用 MINUS 进行联接,不可利用排序,0 号算子使用 HASH EXCEPT DISTINCT 进行求取差集、去重。算子执行时先读取左侧孩子节点输出建立哈希表并去重,再读取右侧孩子节点输出利用哈希表求取差集并去重。

|

||||

|

||||

```javascript

|

||||

Q2:

|

||||

obclient>EXPLAIN SELECT c2 FROM t1 MINUS SELECT c2 FROM t1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

=============================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

---------------------------------------------

|

||||

|0 |HASH EXCEPT DISTINCT| |2 |77 |

|

||||

|1 | TABLE SCAN |T1 |2 |37 |

|

||||

|2 | TABLE SCAN |T1 |2 |37 |

|

||||

=============================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([MINUS(T1.C2, T1.C2)]), filter(nil)

|

||||

1 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

2 - output([T1.C2]), filter(nil),

|

||||

access([T1.C2]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例的执行计划展示中的 outputs \& filters 详细列出了 HASH EXCEPT DISTINCT 算子的输出信息,字段的含义与 MERGE EXCEPT DISTINCT 算子相同。

|

||||

@ -0,0 +1,232 @@

|

||||

INSERT

|

||||

===========================

|

||||

|

||||

INSERT 算子用于将指定的数据插入数据表,数据来源包括直接指定的值和子查询的结果。

|

||||

|

||||

OceanBase 数据库支持的 INSERT 算子包括 INSERT 和 MULTI PARTITION INSERT。

|

||||

|

||||

INSERT

|

||||

---------------------------

|

||||

|

||||

INSERT 算子用于向数据表的单个分区中插入数据。

|

||||

|

||||

如下例所示,Q1 查询将值 (1, '100') 插入到非分区表 t1 中。其中 1 号算子 EXPRESSION 用来生成常量表达式的值。

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1 (c1 INT PRIMARY KEY, c2 VARCHAR2(10));

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE TABLE t2 (c1 INT PRIMARY KEY, c2 VARCHAR2(10)) PARTITION BY

|

||||

HASH(c1) PARTITIONS 10;

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE TABLE t3 (c1 INT PRIMARY KEY, c2 VARCHAR2(10));

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE INDEX IDX_t3_c2 ON t3 (c2) PARTITION BY HASH(c2) PARTITIONS 3;

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

Q1:

|

||||

obclient>EXPLAIN INSERT INTO t1 VALUES (1, '100')\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

====================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

------------------------------------

|

||||

|0 |INSERT | |1 |1 |

|

||||

|1 | EXPRESSION| |1 |1 |

|

||||

====================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([__values.C1], [__values.C2]), filter(nil),

|

||||

columns([{T1: ({T1: (T1.C1, T1.C2)})}]), partitions(p0)

|

||||

1 - output([__values.C1], [__values.C2]), filter(nil)

|

||||

values({1, '100'})

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例中,执行计划展示中的 outputs \& filters 详细列出了 INSERT 算子的输出信息如下:

|

||||

|

||||

|

||||

| **信息名称** | **含义** |

|

||||

|------------|----------------------------------------------------------------|

|

||||

| output | 该算子输出的表达式。 |

|

||||

| filter | 该算子上的过滤条件。 由于示例中 INSERT 算子没有设置 filter,所以为 nil。 |

|

||||

| columns | 插入操作涉及的数据表的列。 |

|

||||

| partitions | 插入操作涉及到的数据表的分区(非分区表可以认为是一个只有一个分区的分区表)。 |

|

||||

|

||||

|

||||

|

||||

更多 INSERT 算子的示例如下:

|

||||

|

||||

* Q2 查询将值(2, '200')、(3, '300')插入到表 t1 中。

|

||||

|

||||

```unknow

|

||||

Q2:

|

||||

obclient>EXPLAIN INSERT INTO t1 VALUES (2, '200'),(3, '300')\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

====================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

------------------------------------

|

||||

|0 |INSERT | |2 |1 |

|

||||

|1 | EXPRESSION| |2 |1 |

|

||||

====================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([__values.C1], [__values.C2]), filter(nil),

|

||||

columns([{T1: ({T1: (T1.C1, T1.C2)})}]), partitions(p0)

|

||||

1 - output([__values.C1], [__values.C2]), filter(nil)

|

||||

values({2, '200'}, {3, '300'})

|

||||

```

|

||||

|

||||

|

||||

|

||||

* Q3 查询将子查询 `SELECT * FROM t3` 的结果插入到表 t1 中。

|

||||

|

||||

```unknow

|

||||

Q3:

|

||||

obclient>EXPLAIN INSERT INTO t1 SELECT * FROM t3\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

====================================

|

||||

|0 |INSERT | |100000 |117862|

|

||||

|1 | EXCHANGE IN DISTR | |100000 |104060|

|

||||

|2 | EXCHANGE OUT DISTR| |100000 |75662 |

|

||||

|3 | SUBPLAN SCAN |VIEW1|100000 |75662 |

|

||||

|4 | TABLE SCAN |T3 |100000 |61860 |

|

||||

================================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([VIEW1.C1], [VIEW1.C2]), filter(nil),

|

||||

columns([{T1: ({T1: (T1.C1, T1.C2)})}]), partitions(p0)

|

||||

1 - output([VIEW1.C1], [VIEW1.C2]), filter(nil)

|

||||

2 - output([VIEW1.C1], [VIEW1.C2]), filter(nil)

|

||||

3 - output([VIEW1.C1], [VIEW1.C2]), filter(nil),

|

||||

access([VIEW1.C1], [VIEW1.C2])

|

||||

4 - output([T3.C1], [T3.C2]), filter(nil),

|

||||

access([T3.C2], [T3.C1]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

* Q4 查询将值(1, '100')插入到分区表 t2 中,通过 `partitions` 参数可以看出,该值会被插入到 t2 的 p5 分区。

|

||||

|

||||

```javascript

|

||||

Q4:

|

||||

obclient>EXPLAIN INSERT INTO t2 VALUES (1, '100')\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

====================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

------------------------------------

|

||||

|0 |INSERT | |1 |1 |

|

||||

|1 | EXPRESSION| |1 |1 |

|

||||

====================================

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([__values.C1], [__values.C2]), filter(nil),

|

||||

columns([{T2: ({T2: (T2.C1, T2.C2)})}]), partitions(p5)

|

||||

1 - output([__values.C1], [__values.C2]), filter(nil)

|

||||

values({1, '100'})

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

MULTI PARTITION INSERT

|

||||

-------------------------------------------

|

||||

|

||||

MULTI PARTITION INSERT 算子用于向数据表的多个分区中插入数据。

|

||||

|

||||

如下例所示,Q5 查询将值(2, '200')、(3, '300')插入到分区表 t2 中,通过 `partitions` 可以看出,这些值会被插入到 t2 的 p0 和 p6 分区。

|

||||

|

||||

```javascript

|

||||

Q5:

|

||||

obclient>EXPLAIN INSERT INTO t2 VALUES (2, '200'),(3, '300')\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

===============================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-----------------------------------------------

|

||||

|0 |MULTI PARTITION INSERT| |2 |1 |

|

||||

|1 | EXPRESSION | |2 |1 |

|

||||

===============================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([__values.C1], [__values.C2]), filter(nil),

|

||||

columns([{T2: ({T2: (T2.C1, T2.C2)})}]), partitions(p0, p6)

|

||||

1 - output([__values.C1], [__values.C2]), filter(nil)

|

||||

values({2, '200'}, {3, '300'})

|

||||

```

|

||||

|

||||

|

||||

|

||||

上述示例的执行计划展示中的 outputs \& filters 详细列出了 MULTI PARTITION INSERT 算子的信息,字段的含义与 INSERT 算子相同。

|

||||

|

||||

更多 MULTI PARTITION INSERT 算子的示例如下:

|

||||

|

||||

* Q6 查询将子查询 `SELECT * FROM t3` 的结果插入到分区表 t2 中,因为无法确定子查询的结果集,因此数据可能插入到 t2 的 p0 到 p9 的任何一个分区中。从1 号算子可以看到,这里的 `SELECT * FROM t3` 会被放在一个子查询中,并将子查询命名为 VIEW1。当 OceanBase 数据库内部改写 SQL 产生了子查询时,会自动为子查询命名,并按照子查询生成的顺序命名为 VIEW1、VIEW2、VIEW3...

|

||||

|

||||

```unknow

|

||||

Q6:

|

||||

obclient>EXPLAIN INSERT INTO t2 SELECT * FROM t3\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

==============================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

--------------------------------------------------

|

||||

|0 |MULTI PARTITION INSERT| |100000 |117862|

|

||||

|1 | EXCHANGE IN DISTR | |100000 |104060|

|

||||

|2 | EXCHANGE OUT DISTR | |100000 |75662 |

|

||||

|3 | SUBPLAN SCAN |VIEW1|100000 |75662 |

|

||||

|4 | TABLE SCAN |T3 |100000 |61860 |

|

||||

==================================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([VIEW1.C1], [VIEW1.C2]), filter(nil),

|

||||

columns([{T2: ({T2: (T2.C1, T2.C2)})}]), partitions(p[0-9])

|

||||

1 - output([VIEW1.C1], [VIEW1.C2]), filter(nil)

|

||||

2 - output([VIEW1.C1], [VIEW1.C2]), filter(nil)

|

||||

3 - output([VIEW1.C1], [VIEW1.C2]), filter(nil),

|

||||

access([VIEW1.C1], [VIEW1.C2])

|

||||

4 - output([T3.C1], [T3.C2]), filter(nil),

|

||||

access([T3.C2], [T3.C1]), partitions(p0)

|

||||

```

|

||||

|

||||

|

||||

|

||||

* Q7 查询将值(1, '100')插入到非分区表 t3 中。虽然 t3 本身是一个非分区表,但因为 t3 上存在全局索引 idx_t3_c2,因此本次插入也涉及到了多个分区。

|

||||

|

||||

```javascript

|

||||

Q7:

|

||||

obclient>EXPLAIN INSERT INTO t3 VALUES (1, '100')\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

==============================================

|

||||

|ID|OPERATOR |NAME|EST. ROWS|COST|

|

||||

-----------------------------------------------

|

||||

|0 |MULTI PARTITION INSERT| |1 |1 |

|

||||

|1 | EXPRESSION | |1 |1 |

|

||||

===============================================

|

||||

|

||||

Outputs & filters:

|

||||

-------------------------------------

|

||||

0 - output([__values.C1], [__values.C2]), filter(nil),

|

||||

columns([{T3: ({T3: (T3.C1, T3.C2)}, {IDX_T3_C2: (T3.C2, T3.C1)})}]), partitions(p0)

|

||||

1 - output([__values.C1], [__values.C2]), filter(nil)

|

||||

values({1, '100'})

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@ -0,0 +1,45 @@

|

||||

TABLE LOOKUP

|

||||

=================================

|

||||

|

||||

TABLE LOOKUP 算子用于表示全局索引的回表逻辑。

|

||||

|

||||

示例:全局索引回表

|

||||

|

||||

```javascript

|

||||

obclient>CREATE TABLE t1(c1 INT PRIMARY KEY, c2 INT, c3 INT) PARTITION BY

|

||||

HASH(c1) PARTITIONS 4;

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>CREATE INDEX i1 ON t1(c2) GLOBAL;

|

||||

Query OK, 0 rows affected (0.12 sec)

|

||||

|

||||

obclient>EXPLAIN SELECT * FROM t1 WHERE c2 = 1\G;

|

||||

*************************** 1. row ***************************

|

||||

Query Plan:

|

||||

| ========================================

|

||||

|ID|OPERATOR |NAME |EST. ROWS|COST |

|

||||

----------------------------------------

|

||||

|0 |TABLE LOOKUP|t1 |3960 |31065|

|

||||

|1 | TABLE SCAN |t1(i1)|3960 |956 |

|